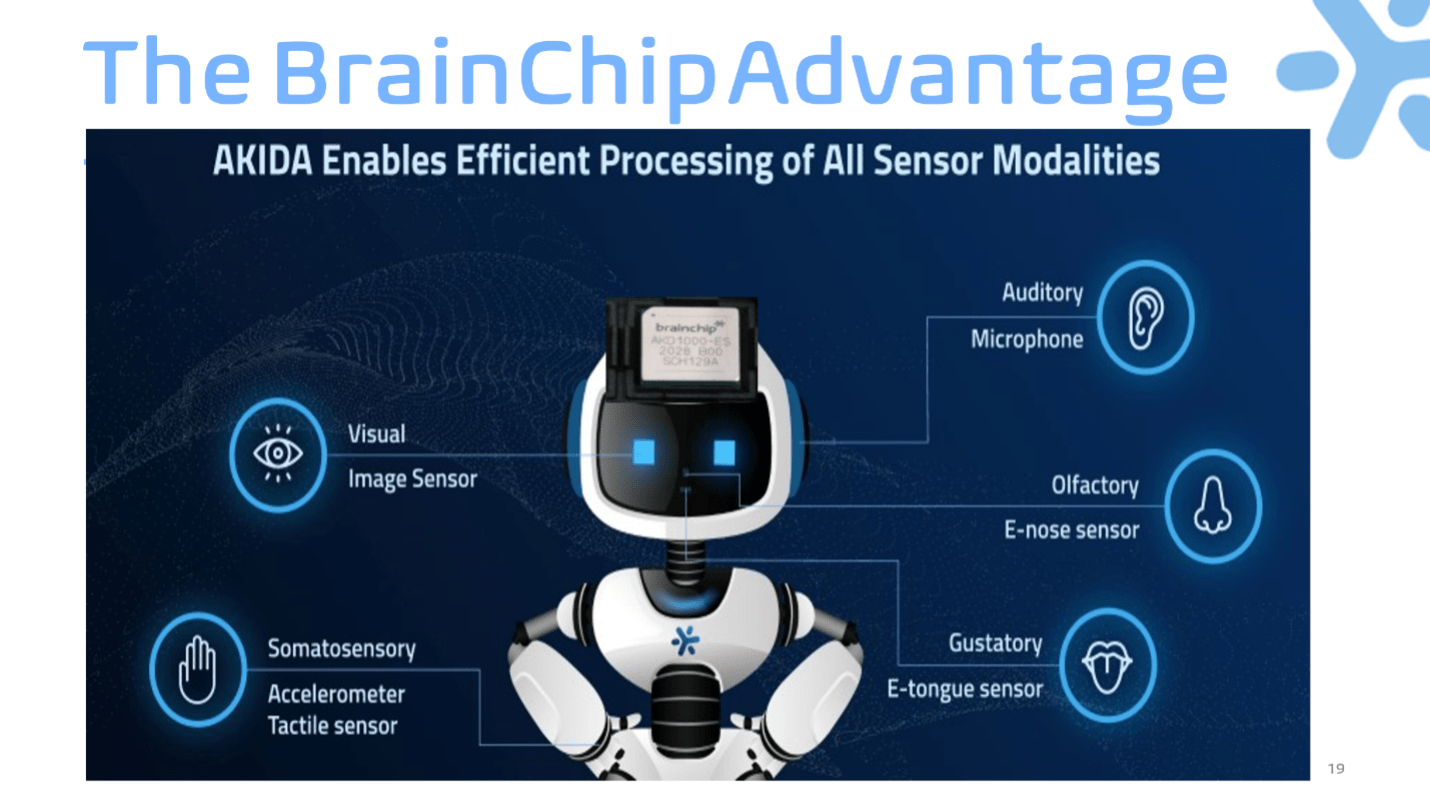

Real-World AI: Processing All Five Senses

As humans, we move about the planet relying heavily on our senses. They help us discern the world around us, keep us safe, and simplify everyday tasks. In the world of Beneficial AI, some of the most exciting possibilities can be found in sensory applications. Sensory AI learns from sensory inputs: information corresponding to the five human senses of vision, hearing, smell, taste, and touch.

Here are some ways that sensory AI modalities mimic the senses and offer benefits in both new and existing applications.

Sight

Seeing is believing, or so they say. In AI, vision is one of the most basic sensory applications, with image sensors performing object detection and recognition. AI vision is used in smart cameras to monitor and record images, facial recognition is used in security settings, and image classification is used in vehicle navigation.

BrainChip's Akida neuromorphic processor is well suited to smart camera systems deployed where power and high-speed connectivity are unavailable or unreliable. A smart camera integrating the Akida processor performs object recognition within the camera itself, without sending images to a back-end database for processing, so it does not depend on a network connection.

Learning, as well as visual data processing, happens within the camera itself. An Akida-equipped smart camera can be continuously trained to recognize new images as needed, without the heavy resource cost of the full retraining that conventional deep learning requires. This is much closer to how the biological brain learns. (See "The AI Revolution: Less Artificial, More Intelligent, and Beneficial to Society.")
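
To make the idea of learning a new category without full retraining more concrete, here is a minimal sketch of one generic technique, a nearest-class-mean (prototype) classifier. It assumes a fixed, already-trained feature extractor upstream that turns each image into a feature vector; only the per-class average vectors are stored and updated. The class and method names are hypothetical, and this is not Akida's API or a description of how Akida implements on-chip learning.

```python
import math
from typing import Dict, List, Sequence


def _distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class PrototypeLearner:
    """Hypothetical on-device learner: the feature extractor is assumed fixed;
    only a running sum and count per class (its prototype) are kept."""

    def __init__(self) -> None:
        self._sums: Dict[str, List[float]] = {}
        self._counts: Dict[str, int] = {}

    def learn(self, label: str, features: Sequence[float]) -> None:
        """Add one labeled example; an unseen label creates a new class on the spot."""
        if label not in self._sums:
            self._sums[label] = [0.0] * len(features)
            self._counts[label] = 0
        self._sums[label] = [s + f for s, f in zip(self._sums[label], features)]
        self._counts[label] += 1

    def predict(self, features: Sequence[float]) -> str:
        """Return the label whose prototype (class mean) is nearest to `features`."""
        if not self._sums:
            raise ValueError("no classes learned yet")

        def distance_to(label: str) -> float:
            n = self._counts[label]
            prototype = [s / n for s in self._sums[label]]
            return _distance(features, prototype)

        return min(self._sums, key=distance_to)
```

For example, after `learn("cat", [0.9, 0.1])` and `learn("dog", [0.1, 0.9])`, a later call to `learn("bird", [0.5, 0.5])` adds a third class immediately, with no pass over the earlier training data. The trade-off is that accuracy depends entirely on how well the fixed feature extractor separates the classes.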

Drones equipped with visual sensors can do more than sightseeing: they can perform safety inspections and identify threats. In agriculture, a drone can monitor the growth of crops on a farm and detect sub-optimal conditions. A drone outfitted with an Akida processor to "see" pests, diseases, and other problems can immediately alert farmers to take corrective action, such as spraying or adjusting irrigation.

Sound

Ever get a song stuck in your head? Most of us have used apps like Shazam to identify a familiar song. This is a basic operation: take the audio snippet or lyric, match it to a database, and return the answer. Voice-operated devices also use a degree of sound recognition.

Just as smart cameras identify sights, smart microphones are trained to identify sounds. Acoustic monitoring is often used to assess the health and performance of a system: it "listens" for sounds that indicate an impending problem. In industrial automation and robotics, a microphone with its own Akida processor can monitor noise, make decisions, and trigger action based on what it hears, such as stopping a machine and signaling for repair.
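
The listen, decide, act loop described above can be sketched in a few lines. This is a deliberately simple stand-in: it uses frame loudness (RMS level) compared against a known-healthy baseline, whereas a trained model would more likely look at spectral patterns. The function names, the `stop_machine` callback, and the threshold values are all hypothetical and chosen only for illustration.

```python
import math
from typing import Callable, Optional, Sequence


def rms(frame: Sequence[float]) -> float:
    """Root-mean-square level of one audio frame (0.0 for an empty frame)."""
    if not frame:
        return 0.0
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def monitor(frames: Sequence[Sequence[float]], baseline_rms: float,
            stop_machine: Callable[[int], None],
            ratio: float = 3.0, consecutive: int = 2) -> Optional[int]:
    """Scan audio frames and call stop_machine(frame_index) as soon as
    `consecutive` frames in a row exceed ratio * baseline_rms.
    Returns the index of the triggering frame, or None if no stop was needed."""
    run = 0
    for i, frame in enumerate(frames):
        if rms(frame) > ratio * baseline_rms:
            run += 1
            if run >= consecutive:
                stop_machine(i)  # act locally: halt the machine and flag for repair
                return i
        else:
            run = 0  # a single quiet frame resets the count
    return None
```

Requiring several loud frames in a row is one simple way to avoid stopping a machine over a single transient bang; the right window length would depend on the equipment being monitored.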

In sensor fusion, inputs of different types are combined. Audio and visual data from separate sensors together reveal more about the overall health of a machine than either does alone. Robotic automation can also be combined with voice and image sensors for voice and gesture recognition, helping people with disabilities perform new tasks and navigate their homes, and the world, in new ways.
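
One common way to combine sensors is "late fusion": each sensor's model produces its own anomaly score, and the scores are merged afterward. The sketch below uses a weighted average; the sensor names, weights, and the 0.5 alert threshold are hypothetical, and real systems may instead fuse raw features earlier in the pipeline.

```python
from typing import Dict


def fuse_scores(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Late fusion: weighted average of per-sensor anomaly scores (each in [0, 1])."""
    total_weight = sum(weights[name] for name in scores)
    if total_weight <= 0:
        raise ValueError("weights for the reported sensors must sum to a positive value")
    return sum(scores[name] * weights[name] for name in scores) / total_weight


def needs_inspection(scores: Dict[str, float], weights: Dict[str, float],
                     threshold: float = 0.5) -> bool:
    """True when the fused anomaly score exceeds the alert threshold."""
    return fuse_scores(scores, weights) > threshold
```

For instance, with equal weights, an audio score of 0.75 and a vision score of 0.25 fuse to exactly 0.5, which does not exceed the threshold; trusting the microphone three times as much as the camera (`{"audio": 3.0, "vision": 1.0}`) raises the fused score to 0.625 and triggers an inspection.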

Smell

Olfactory analysis promises a major breakthrough for the healthcare industry. With every breath, our bodies expel carbon dioxide plus other chemical compounds that have been associated with various diseases. Breath sensor data is being used to identify conditions such as Parkinson's, several kinds of cancer, kidney failure, multiple sclerosis, and more. It even shows promise in diagnosing whether a tumor is benign or malignant. (And it can definitely tell you if you need a breath mint.)

When used in breathalyzer-type devices, Akida-equipped olfactory sensors perform efficient and accurate detection of more than 100 chemical compounds to help in diagnosis. Because Akida is a complete neural processor that does not require an external CPU or memory, and because its power requirements are low, it can be used in inexpensive hand-held diagnostic testing tools. Localized AI processing on the device also means no complex communication with the cloud is required, so devices can even be used in remote areas. This type of technology has the potential to help detect and control outbreaks of diseases such as malaria and Ebola, which are deadly in many regions of the world.

Taste

Even a wine aficionado with a palate so refined they can tell the difference between French and American oak can't match the capabilities of an "electronic tongue." Much like olfactory molecules, gustatory molecules can be measured and analyzed with AI. The food industry can use the technology for food safety and freshness, and to improve and automate the food supply system from processing and production to distribution and retailing. Food loss, spoilage, and waste are a worldwide problem, and disruptions caused by the recent global pandemic only magnified the need for advanced solutions.

E-tongues are also used in the pharmaceutical industry to formulate medicines, where human taste testing may be unacceptable or inappropriate for ethical or health reasons. E-tongues have other key advantages over human tasters: they are immune to subjectivity, they don't tire, tasting toxic or contaminated samples poses no risk, and they can be custom-configured for precise sensitivity. Taste-sensing AI devices with Akida processors can continuously learn and re-learn based on newly detected information.

Touch

Some scientists say our sense of touch develops before any of the others. The skin covering our entire body is full of different receptors tuned to different sensations.

Touch perception and analysis offer a surprising array of applications. Sensing heat and cold, moisture, pressure, texture, and other sensations can drastically improve the way machines interact with their surroundings, enabling more efficient and effective systems in nearly any environment.

Human/machine interfaces and prosthetic devices are ripe for AI innovation with somatosensory perception. Touch-enabled AI devices can be used for underwater or underground exploration in spaces a human can't squeeze into. An autonomous vehicle may use touch sensors to adjust how it drives on snowy or wet streets. Akida processors can use touch sensing to perform vibrational analysis of infrastructure such as bridges or roads, and identify potential problems as the structures age. And again, sensor fusion combining touch with visual and/or audio data processing promises even more precise information.
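
A common idea in structural health monitoring is to track a structure's dominant vibration frequency over time, since a loss of stiffness tends to lower it. The sketch below finds the strongest frequency in a window of vibration samples with a naive discrete Fourier transform and flags a drift from a recorded baseline. The function names and the 5% tolerance are hypothetical, the O(N²) DFT is for clarity only (a real device would use an FFT or a learned model), and nothing here describes how Akida itself performs vibrational analysis.

```python
import cmath
import math
from typing import Sequence


def dominant_frequency(samples: Sequence[float], sample_rate_hz: float) -> float:
    """Frequency in Hz of the strongest non-DC bin of a naive DFT over `samples`."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    best_k, best_mag = 1, -1.0
    for k in range(1, n // 2 + 1):  # skip k = 0 (the constant/DC component)
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(samples))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * sample_rate_hz / n  # convert bin index to Hz


def frequency_shifted(baseline_hz: float, measured_hz: float,
                      tolerance: float = 0.05) -> bool:
    """True if the measured frequency differs from the baseline by more than
    `tolerance`, expressed as a fraction of the baseline."""
    return abs(measured_hz - baseline_hz) / baseline_hz > tolerance
```

For example, one second of a 5 Hz sine wave sampled at 100 Hz yields a dominant frequency of 5.0 Hz; a later reading of 4.5 Hz would be flagged as a 10% shift, while 4.9 Hz would stay within tolerance.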

Using AI to perform sensory tasks has been possible to some extent for years. However, Akida improves sensory data processing in clear ways: greater intelligence, continuous learning, and the ability to process data directly on edge devices instead of merely recording it and transmitting it to a data center.

BrainChip: This is our Mission.