See what they’re saying:

“At Prophesee, we are driven by the pursuit of groundbreaking innovation addressing event-based vision solutions. Combining our highly efficient neuromorphic-enabled Metavision sensing approach with BrainChip’s Akida neuromorphic processor holds great potential for developers of high-performance, low-power Edge AI applications. We value our partnership with BrainChip and look forward to getting started with their 2nd generation Akida platform, supporting vision transformers and TENNs,” said Luca Verre, Co-Founder and CEO at Prophesee.

“BrainChip and its unique digital neuromorphic IP have been part of IFS’ Accelerator IP Alliance ecosystem since 2022,” said Suk Lee, Vice President of Design Ecosystem Development at IFS. “We are keen to see how the capabilities in Akida’s latest generation offerings enable more compelling AI use cases at the edge.”

“Edge Impulse is thrilled to collaborate with BrainChip and harness their groundbreaking neuromorphic technology. Akida’s 2nd generation platform adds TENNs and Vision Transformers to a strong neuromorphic foundation. That’s going to accelerate the demand for intelligent solutions. Our growing partnership is a testament to the immense potential of combining Edge Impulse’s advanced machine learning capabilities with BrainChip’s innovative approach to computing. Together, we’re forging a path toward a more intelligent and efficient future,” said Zach Shelby, Co-Founder and CEO at Edge Impulse.

“BrainChip has some exciting upcoming news and developments underway,” said Daniel Mandell, Director at VDC Research. “Their 2nd generation Akida platform provides direct support for the intelligence chip market, which is exploding. IoT market opportunities are driving rapid change in our global technology ecosystem, and BrainChip will help us get there.”

“Integration of AI Accelerators, such as BrainChip’s Akida technology, has application for high-performance RF, including spectrum monitoring, low-latency links, distributed networking, AESA radar, and 5G base stations,” said John Shanton, CEO of Ipsolon Research, a leader in small form factor, low power SDR technology.

“Through our collaboration with BrainChip, we are enabling the combination of SiFive’s RISC-V processor IP portfolio and BrainChip’s 2nd generation Akida neuromorphic IP to provide a power-efficient, high capability solution for AI processing on the Edge,” said Phil Dworsky, Global Head of Strategic Alliances at SiFive. “Deeply embedded applications can benefit from the combination of compact SiFive Essential™ processors with BrainChip’s Akida-E, efficient processors; more complex applications including object detection, robotics, and more can take advantage of SiFive X280 Intelligence™ AI Dataflow Processors tightly integrated with BrainChip’s Akida-S or Akida-P neural processors.”

“Ai Labs is excited about the introduction of BrainChip’s 2nd generation Akida neuromorphic IP, which will support vision transformers and TENNs. This will enable high-end vision and multi-sensory capability devices to scale rapidly. Together, Ai Labs and BrainChip will support our customers’ needs to address complex problems,” said Bhasker Rao, Founder of Ai Labs. “Improving development and deployment for industries such as manufacturing, oil and gas, power generation, and water treatment, preventing costly failures and reducing machine downtime.”

“We see an increasing demand for real-time, on-device, intelligence in AI applications powered by our MCUs and the need to make sensors smarter for industrial and IoT devices,” said Roger Wendelken, Senior Vice President in Renesas’ IoT and Infrastructure Business Unit. “We licensed Akida neural processors because of their unique neuromorphic approach to bring hyper-efficient acceleration for today’s mainstream AI models at the edge. With the addition of advanced temporal convolution and vision transformers, we can see how low-power MCUs can revolutionize vision, perception, and predictive applications in a wide variety of markets like industrial and consumer IoT and personalized healthcare, just to name a few.”

“We see a growing number of predictive industrial (including HVAC, motor control) or automotive (including fleet maintenance), building automation, remote digital health equipment and other AIoT applications use complex models with minimal impact to product BOM and need faster real-time performance at the Edge,” said Nalin Balan, Head of Business Development at Reality AI, a Renesas company. “BrainChip’s ability to efficiently handle streaming high frequency signal data, vision, and other advanced models at the edge can radically improve scale and timely delivery of intelligent services.”

“Advancements in AI require parallel advancements in on-device learning capabilities while simultaneously overcoming the challenges of efficiency, scalability, and latency,” said Richard Wawrzyniak, Principal Analyst at Semico Research. “BrainChip has demonstrated the ability to create a truly intelligent edge with Akida and moves the needle even more, in terms of how Edge AI solutions are developed and deployed. The benefits of on-chip AI from a performance and cost perspective are hard to deny.”

“BrainChip’s cutting-edge neuromorphic technology is paving the way for the future of artificial intelligence, and Drexel University recognizes its immense potential to revolutionize numerous industries. We have experienced that neuromorphic compute is easy to use and addresses real-world applications today. We are proud to partner with BrainChip and advancing their groundbreaking technology, including TENNs and how it handles time series data, which is the basis to address a lot of complex problems and unlocking its full potential for the betterment of society,” said Anup Das, Associate Professor and Nagarajan Kandasamy, Interim Department Head of Electrical and Computer Engineering, Drexel University.

“Our customers wanted us to enable expanded predictive intelligence, target tracking, object detection, scene segmentation, and advanced vision capabilities. This new generation of Akida allows designers and developers to do things that were not possible before in a low-power edge device,” said Sean Hehir, BrainChip CEO. “By inferring and learning from raw sensor data, removing the need for digital signal pre-processing, we take a substantial step toward providing a cloudless Edge AI experience.”

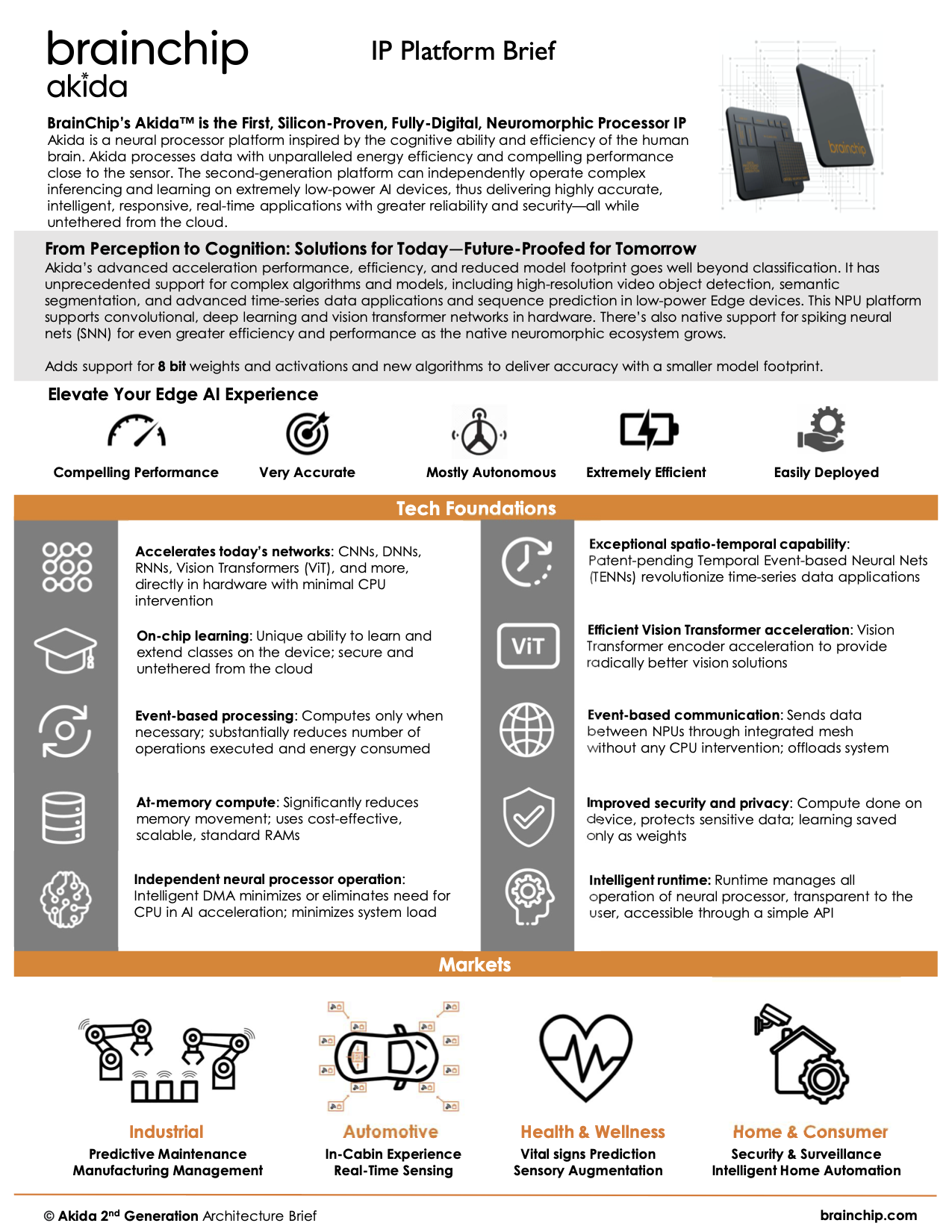

Solutions for Today, Future-Proofed for Tomorrow

BrainChip’s vision is to make AI ubiquitous through innovation that accelerates personalized artificial intelligence everywhere.

Hence, Akida technology is inspired by the brain, the most efficient cognitive “processor” that we know of. It is the result of more than 15 years of AI architecture research and development by BrainChip co-founders – Peter Van der Made (CTO) and Anil Mankar (CDO) along with their team of neuromorphic experts.

The second-generation platform can independently operate complex inferencing and learning on extremely low-power AI devices delivering highly accurate, intelligent, responsive, real-time applications with greater reliability and security.

Akida now supports more complex algorithms and models, including high-resolution video object detection, segmentation and advanced time-series data applications, sequence prediction, and advanced speech recognition in low-power edge devices. This architecture also supports all convolutional, deep learning, and vision transformer networks and includes native support for spiking neural nets (SNN) with even greater efficiency and performance.

Akida 2nd Generation Platform Brief

The 2nd generation Akida builds on the existing technology foundation and supercharges the processing of raw time-continuous streaming data, such as video analytics, target tracking, audio classification, analysis of health monitoring data such as heart rate and respiratory rate for vital signs prediction, and time series analytics used in forecasting, and predictive production line maintenance. These capabilities are critically needed in industrial, automotive, digital health, smart home, and smart city applications.

Availability:

Engaging with lead adopters now. General availability in Q3’2023.

Key Benefits:

Akida continually strives to bring the benefits of neuromorphic processing to AI at the edge. It does so by accelerating today’s convolutional networks and future spiking neural networks, and by introducing new algorithms that can revolutionize efficient intelligence.

Add more intelligence to manage complex networks directly in hardware. Akida extends its ability to process multiple layers of the network simultaneously with support for long-range skip connections. This allows more complex networks like ResNet-50 to be processed directly by Akida without CPU intervention, which makes execution faster. Support for 8-bit weights and activations, alongside the existing 4-, 2-, and 1-bit support, broadens model coverage.

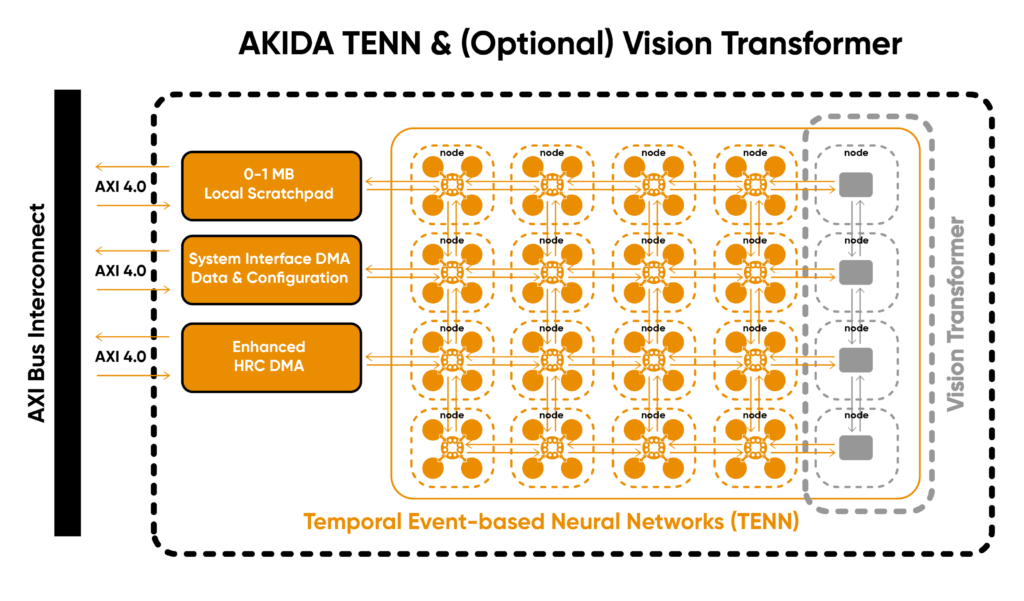

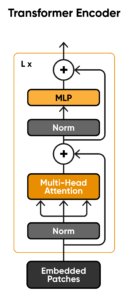

Edge devices demand much more effective, higher-resolution vision solutions in very low power envelopes. Akida adds a very efficient vision transformer implementation that accelerates patch and position encoding as well as the main encoder block. Hardware acceleration of the ViT encoder reduces the computational complexity of vision transformer implementations and improves the latency of hybrid solutions that combine ViT with CNNs to get the best of both.

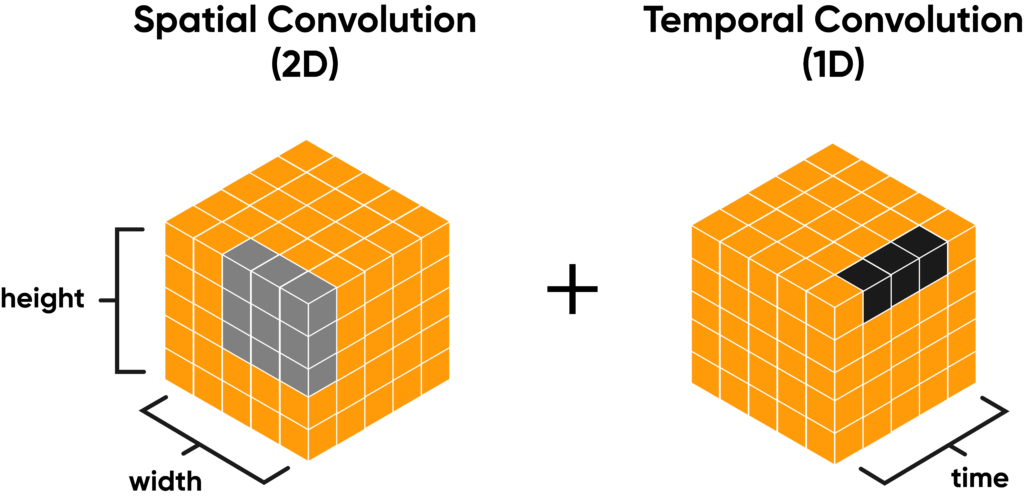

Akida is now much more efficient at handling time series data. Many interesting new use cases and models need efficient handling of 3D data (2D frame + 1D time) for applications like video object detection and target tracking. Akida also helps with 1D time series data for applications in streaming audio or healthcare data. Akida adds separable spatial-temporal convolutions, which we call Temporal Event Based Neural Nets (TENNs), that allow efficient processing of these types of models for radically simpler solutions.

A key challenge with edge devices is trading off accuracy against model size, which are directly related: for greater accuracy and greater capability, models are growing in size.

Akida’s ability to quantize at 8, 4, 2, and 1 bits helps reduce model size while maintaining accuracy. In addition, the 2nd generation of Akida adds a new network algorithm, Temporal Event Based Neural Nets (TENNs), that can radically improve efficiency and performance while delivering the desired accuracy.
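To make the size argument concrete, here is a minimal sketch of how weight storage shrinks with bit width, together with a generic symmetric uniform quantizer. This is not BrainChip's quantization scheme or tooling: the function names, the one-million-parameter model, and the sample weights are all assumptions chosen for illustration.

```python
def model_size_bytes(num_params: int, bits: int) -> int:
    """Storage needed for num_params weights at the given bit width."""
    return (num_params * bits + 7) // 8  # round up to whole bytes


def quantize_symmetric(weights: list[float], bits: int) -> tuple[list[int], float]:
    """Map floats to signed integer codes in [-qmax, qmax] with one shared scale.

    Generic textbook scheme (an assumption, not Akida's method). It needs at
    least 2 bits; 1-bit weights would use a sign-based scheme instead.
    """
    if bits < 2:
        raise ValueError("this symmetric scheme needs at least 2 bits")
    qmax = 2 ** (bits - 1) - 1
    largest = max(abs(w) for w in weights)
    scale = largest / qmax if largest > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from integer codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    num_params = 1_000_000  # hypothetical model size
    for bits in (32, 8, 4, 2, 1):
        megabytes = model_size_bytes(num_params, bits) / 1e6
        print(f"{bits:>2}-bit weights: {megabytes:.3f} MB")

    codes, scale = quantize_symmetric([0.6, -1.0, 0.25, 0.0], bits=4)
    print(codes, dequantize(codes, scale))
```

The storage figure depends only on parameter count and bit width, so going from 8-bit to 4-bit weights halves the footprint. Whether accuracy holds at the lower width depends on the model and on how it was trained.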

Architecture and Notable Features

Skip connections:

The Akida neural processor can process multiple layers simultaneously, which is an important feature. It now adds support for short- or long-range skip connections in the neural mesh or in the local scratchpad. Skip connections reduce degradation (ResNet) or enable feature reuse (DenseNet). They are usually handled by the host CPU but are now handled directly by the Akida neural processor, substantially reducing latency and complexity. Networks like ResNet-50 are therefore handled completely in the neural processor without CPU intervention.
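For readers unfamiliar with the term, a skip connection can be sketched in a few lines. The example below is a generic illustration in plain Python, not Akida code: `scale_layer` is a hypothetical stand-in for a learned layer, and the two helper functions show the ResNet-style (add) and DenseNet-style (concatenate) variants.

```python
from typing import Callable, Sequence

Vector = list[float]
Layer = Callable[[Vector], Vector]


def relu(x: Vector) -> Vector:
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in x]


def scale_layer(factor: float) -> Layer:
    """Hypothetical stand-in for a learned layer: multiplies every element."""
    return lambda x: [factor * v for v in x]


def residual_block(x: Vector, layers: Sequence[Layer]) -> Vector:
    """ResNet-style block: run the layers, then add the block input back in."""
    y = x
    for layer in layers:
        y = layer(y)
    if len(y) != len(x):
        raise ValueError("an additive skip connection needs matching shapes")
    return relu([a + b for a, b in zip(y, x)])


def dense_connection(x: Vector, layer: Layer) -> Vector:
    """DenseNet-style connection: concatenate the input with the layer output."""
    return x + layer(x)  # list concatenation, not element-wise addition


if __name__ == "__main__":
    print(residual_block([1.0, -2.0], [scale_layer(0.5)]))
    print(dense_connection([1.0, 2.0], scale_layer(2.0)))
```

In both variants the block input must stay available until the later layer finishes, which is why keeping it on the accelerator avoids a round trip through the host.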

Vision transformers provide a performance boost:

The Vision Transformer, or ViT, is a model for image classification that employs a Transformer-like architecture over patches of the image. An image is split into fixed-size patches, each of which is then linearly embedded; position embeddings are added, and the resulting sequence of vectors is fed to a standard Transformer encoder. To perform classification, the standard approach of adding an extra learnable “classification token” to the sequence is used. Akida now supports patch and position embeddings and the encoder block in hardware.
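The input pipeline described above (split into patches, embed linearly, prepend a classification token, add position embeddings) can be sketched in plain Python. This illustrates the standard ViT front end on a tiny single-channel image; it says nothing about how Akida implements it in hardware. All names, shapes, and values are assumptions.

```python
Matrix = list[list[float]]


def split_into_patches(image: Matrix, patch: int) -> Matrix:
    """Cut an H x W image into non-overlapping patch x patch tiles, flattened."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be multiples of the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            patches.append([image[top + r][left + c]
                            for r in range(patch) for c in range(patch)])
    return patches


def linear_embed(patches: Matrix, weights: Matrix) -> Matrix:
    """Project each flattened patch to D dimensions.

    weights holds D rows, each of length patch * patch (one row per output dim).
    """
    return [[sum(p * w for p, w in zip(vec, row)) for row in weights]
            for vec in patches]


def build_encoder_input(image: Matrix, patch: int, weights: Matrix,
                        class_token: list[float], positions: Matrix) -> Matrix:
    """Prepend the class token, then add one position embedding per token."""
    tokens = [class_token] + linear_embed(split_into_patches(image, patch), weights)
    if len(tokens) != len(positions):
        raise ValueError("need one position embedding per token")
    return [[t + p for t, p in zip(tok, pos)]
            for tok, pos in zip(tokens, positions)]


if __name__ == "__main__":
    image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # 4 x 4
    weights = [[1.0] * 4 for _ in range(3)]       # D = 3, patch area = 4
    class_token = [0.0, 0.0, 0.0]                 # learnable in a real model
    positions = [[0.1 * i] * 3 for i in range(5)]  # 4 patches + class token
    for token in build_encoder_input(image, 2, weights, class_token, positions):
        print(token)
```

The resulting sequence (here five tokens of dimension three) is what a Transformer encoder block would consume.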

Spatial Temporal convolutions:

Akida’s 2nd generation supports extremely efficient 3D convolutions.

This capability simplifies and accelerates the handling of 3D data such as video, supporting applications like video object detection and target tracking.
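One common way to make spatial-temporal convolutions cheaper is to factor a full 3D kernel into a 2D spatial convolution followed by a 1D temporal convolution. The sketch below compares weight counts for the two forms. This brief does not describe Akida's exact factorization, so the function names, layer shapes, and intermediate channel count here are assumptions, and biases are omitted.

```python
def conv3d_params(kt: int, kh: int, kw: int, c_in: int, c_out: int) -> int:
    """Weights in a full 3D convolution with a kt x kh x kw kernel."""
    return kt * kh * kw * c_in * c_out


def separable_params(kt: int, kh: int, kw: int,
                     c_in: int, c_mid: int, c_out: int) -> int:
    """Weights in a spatial (1 x kh x kw) plus temporal (kt x 1 x 1) pair."""
    spatial = kh * kw * c_in * c_mid   # 2D convolution applied per frame
    temporal = kt * c_mid * c_out      # 1D convolution applied across frames
    return spatial + temporal


if __name__ == "__main__":
    full = conv3d_params(3, 3, 3, c_in=64, c_out=64)
    split = separable_params(3, 3, 3, c_in=64, c_mid=64, c_out=64)
    print(f"full 3D kernel:   {full} weights")
    print(f"separable kernel: {split} weights ({full / split:.2f}x fewer)")
```

For this hypothetical 3 x 3 x 3 layer with 64 channels throughout, the factored form needs 49,152 weights instead of 110,592. The gap widens as the temporal kernel grows.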

Temporal Event-Based Neural Nets:

Temporal Event-based Neural Networks (TENNs) are lightweight, energy-efficient neural networks developed by BrainChip that excel at processing temporal data. TENNs offer state-of-the-art performance using significantly fewer parameters and address the low-power requirements of edge-based devices that need to support larger workloads.

TENNs process many types of temporal data including one-dimensional time series from microphones, vitals from health monitors, spatio-temporal events from dynamic vision sensors, live video from traditional cameras, and many others, allowing developers flexibility in performing compute-heavy tasks on devices with limited memory, battery, and compute resources.

One IP Platform, Multiple Configurable Products

The 2nd generation IP platform will support a very wide range of market verticals and will be delivered in three classes of product.

Akida-E: Extremely energy-efficient, for always-on operation very close to, or at, sensors.

Akida-S: Integration into MCUs or other general-purpose platforms that are used in a broad variety of sensor-related applications.

Akida-P: Mid-range to higher-end configurations with optional vision transformers for groundbreaking yet efficient performance.

Key Benefits:

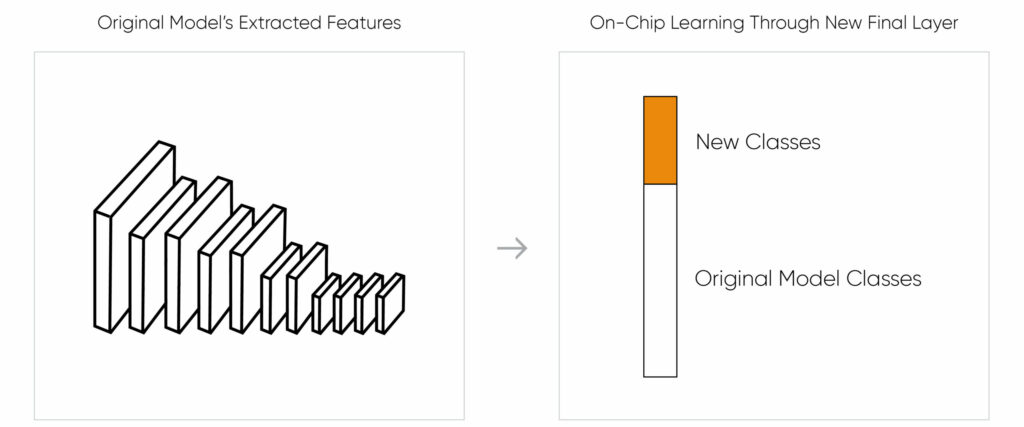

- This customization and learning is simple to enable and is untethered from the cloud.

- Models can adapt to changes in the field, and the AI application can implement incremental learning without costly cloud model retraining.

- It adds security and privacy because the input data isn’t saved; it is stored only as weights.

Demonstrated Edge Learning For:

- Object detection with MobileNet trained on the ImageNet dataset.

- Keyword spotting with DS-CNN trained on the Google Speech Commands dataset.

- Hand gesture classification with a small CNN trained on a custom DVS events dataset.

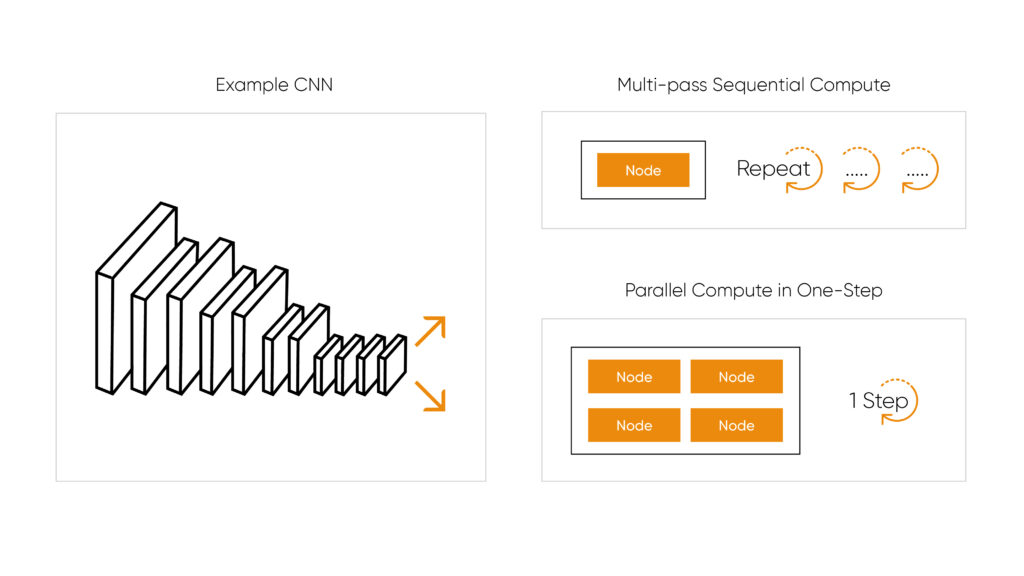

Multi-Pass Processing Delivers Scalability

Akida leverages multi-pass processing to reduce the number of neural processing units required for a given compute task by segmenting and processing sequentially.

Because Akida can process multiple layers at a time, and the DMA handles the loading independently of the CPU, the additional latency of going from parallel to sequential processing is substantially lower than in traditional DLAs, where layer processing is managed by the CPU.
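The idea of segmenting a network and processing it sequentially can be illustrated with a simple greedy planner. This is only a sketch of the general technique, not the Akida runtime's actual scheduler. The per-layer node requirements, the `plan_passes` name, and the rule that a single layer must fit in one pass are all assumptions.

```python
def plan_passes(layer_nodes: list[int], available_nodes: int) -> list[list[int]]:
    """Group consecutive layers into passes that each fit the node budget.

    layer_nodes[i] is the (hypothetical) number of nodes layer i needs.
    Returns a list of passes, each a list of layer indices run together.
    """
    passes: list[list[int]] = []
    current: list[int] = []
    used = 0
    for index, needed in enumerate(layer_nodes):
        if needed > available_nodes:
            raise ValueError(f"layer {index} does not fit in a single pass")
        if used + needed > available_nodes:
            passes.append(current)  # budget exhausted: close this pass
            current, used = [], 0
        current.append(index)
        used += needed
    if current:
        passes.append(current)
    return passes


if __name__ == "__main__":
    layers = [2, 3, 1, 4, 2]  # hypothetical node demand per layer
    for budget in (12, 4):
        print(f"{budget} nodes -> passes {plan_passes(layers, budget)}")
```

With enough nodes the whole network runs in one pass. With a smaller budget the same network still runs, at the cost of more sequential passes.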

Key Benefits:

- Extremely scalable, as it runs larger networks on a given set of nodes, thereby reducing silicon footprint and power in the SoC

- Transparent to the application developer or user, as it is handled by the runtime software

- Provides future-proofing, since you can scale today’s designs for tomorrow’s models