Performance Where It Matters Most

Smarter AI at the Edge Starts with Sparsity

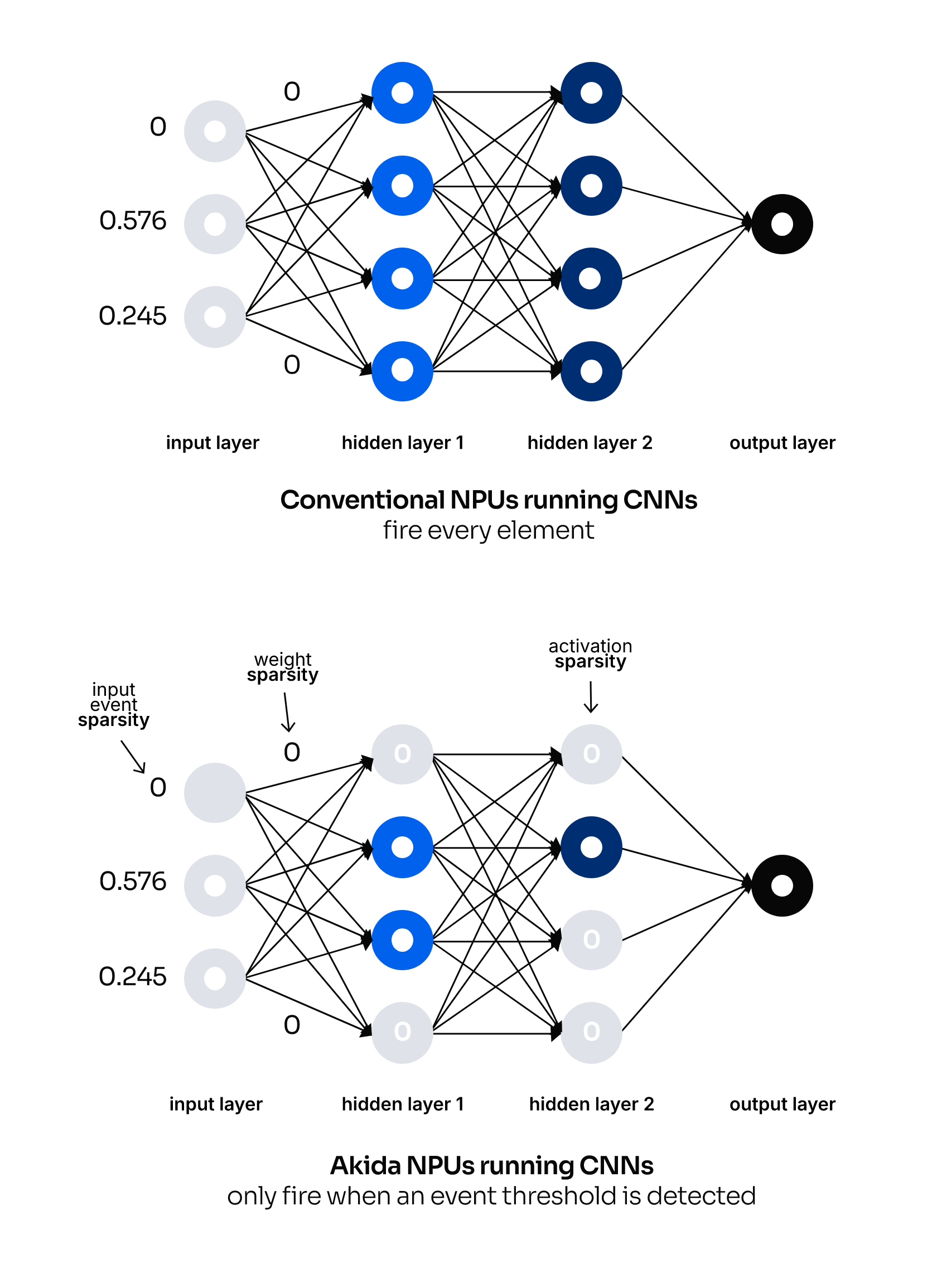

Akida™ is built on the principle of sparsity, the idea that accurate AI results come from processing only the most meaningful data. By focusing only on what’s necessary, Akida enables longer battery life, smaller and cooler devices, and faster responses for real-time applications.

Akida NPUs running CNNs only fire when an event threshold is crossed.

Less to Process, More Efficiency to Gain

Akida achieves efficiency at every level of the AI pipeline by reducing data, weights, and activations that don’t contribute meaningful information.

Sparse Data

Streaming inputs are converted to events at the hardware level, reducing the volume of data by up to 10x before processing begins.
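The source does not say how Akida's hardware turns a stream into events. As a generic illustration of the idea, the sketch below uses a simple send-on-delta encoder: a sample becomes an event only when it differs from the last emitted value by at least a threshold. The function name `to_events` and the `threshold` parameter are hypothetical, and the reduction it achieves depends entirely on the signal, not on the "up to 10x" figure above.

```python
from typing import Iterable, List, Optional, Tuple


def to_events(samples: Iterable[float], threshold: float) -> List[Tuple[int, float]]:
    """Hypothetical send-on-delta encoder (not Akida's hardware scheme).

    Emits (index, value) only when a sample differs from the last
    emitted value by at least `threshold`; unchanged input produces nothing.
    """
    events: List[Tuple[int, float]] = []
    last: Optional[float] = None
    for i, x in enumerate(samples):
        if last is None or abs(x - last) >= threshold:
            events.append((i, x))
            last = x  # compare future samples against the latest event
    return events


if __name__ == "__main__":
    signal = [0.0, 0.01, 0.02, 0.5, 0.51, 0.52, 0.53, 0.1, 0.1, 0.1]
    events = to_events(signal, threshold=0.1)
    print(events)
    print(f"{len(signal)} samples -> {len(events)} events")
```

A slowly drifting or static input yields few events, so everything downstream has less to process.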

Sparse Weights

Unnecessary weights are pruned and compressed, reducing model size and compute demand by up to 10x.
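The source does not name Akida's pruning or compression method. Magnitude pruning is one common technique, so the sketch below uses it as a stand-in: zero the smallest-magnitude weights, then store only the survivors as (index, value) pairs. `magnitude_prune`, `compress`, and the `sparsity` parameter are illustrative assumptions, not MetaTF or Akida APIs.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Return a copy of `weights` with the smallest-magnitude fraction
    `sparsity` of entries set to zero (generic magnitude pruning)."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * weights.size)  # number of weights to remove
    pruned = weights.astype(float).ravel()  # astype returns a copy
    if k > 0:
        # Indices of the k smallest |w|; argpartition avoids a full sort.
        drop = np.argpartition(np.abs(pruned), k - 1)[:k]
        pruned[drop] = 0.0
    return pruned.reshape(weights.shape)


def compress(weights: np.ndarray):
    """Store only nonzero weights as (flat index, value) pairs."""
    idx = np.flatnonzero(weights)
    return idx, weights.ravel()[idx]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.normal(size=(64, 64))
    w_sparse = magnitude_prune(w, sparsity=0.9)
    idx, vals = compress(w_sparse)
    print(f"stored weights: {w.size} -> {vals.size}")
```

In practice pruning is usually followed by fine-tuning to recover accuracy; the sketch shows only the pruning and storage steps.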

Sparse Activations

Only essential activations are passed to the next layers, cutting downstream computation by up to 10x.
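To show why thresholded activations save work, here is a minimal sketch under stated assumptions: a unit "fires" only if its pre-activation exceeds a fixed threshold, and the next dense layer touches only the weight rows of units that fired. The names `sparse_activations` and `next_layer` and the threshold value are hypothetical; this is not Akida's implementation.

```python
import numpy as np


def sparse_activations(pre: np.ndarray, threshold: float):
    """For a 1-D vector of pre-activations, return the indices and values
    of units that cross `threshold`; all other units stay silent."""
    active = np.flatnonzero(pre > threshold)
    return active, pre[active]


def next_layer(active_idx: np.ndarray, active_vals: np.ndarray,
               weights: np.ndarray) -> np.ndarray:
    """Propagate only active units through a dense layer of shape
    (n_in, n_out). Work scales with len(active_idx), not n_in."""
    return active_vals @ weights[active_idx]


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    pre = rng.normal(size=256)
    W = rng.normal(size=(256, 32))
    idx, vals = sparse_activations(pre, threshold=1.5)
    out = next_layer(idx, vals, W)
    # Same result as the dense computation with sub-threshold units zeroed.
    dense = np.where(pre > 1.5, pre, 0.0) @ W
    print(f"{idx.size}/{pre.size} units active; match: {np.allclose(out, dense)}")
```

The output matches the dense product exactly; the savings come from skipping rows whose inputs would have contributed zero.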

Traditional CNNs compute every layer at every timestep and can consume watts of power processing full data streams, even when nothing changes.

Akida takes a different approach, processing only meaningful information. This enables real-time streaming AI that runs continuously on milliwatts of power, making it possible to deploy always-on intelligence in wearables, sensors, and other battery-powered devices.

- Sparse Data – Traditional CNNs process every incoming data point, regardless of change. Akida filters input at the hardware level and reacts only to new or relevant information.

- Sparse Weights – Conventional models carry millions of weights, including many with little impact. Akida prunes these during training, reducing memory and compute requirements.

- Sparse Activations – Most CNNs pass all activations forward. Akida activates only when output crosses a threshold, avoiding unnecessary computation.

Engineered from Inception for Embedded AI Efficiency

Akida’s architecture is purpose-built for event-driven workloads. Everything is optimized to do more with less.

Process Only When Needed

Computation runs only when an event needs to be processed, reducing energy and workload.

Communicate Essentials

Neural processing nodes share data only when it’s needed, avoiding power-hungry communication overhead.

Keep Data Close to Compute

Memory is distributed and placed near compute nodes to reduce latency and power draw.

Focus on Development, Not Overhead

The intelligent runtime manages everything behind the scenes, transparent to users and accessible through a simple API.

Learn and Adapt on Device

Akida uniquely supports on-chip learning, allowing devices to personalize and adapt without the cloud.
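The source does not describe how Akida's on-chip learning works. As a generic illustration of on-device personalization, the sketch below uses a nearest-class-mean learner: it assumes embeddings come from a frozen feature extractor, keeps a running mean per class, and classifies new inputs by the closest class mean, with no data leaving the device. `PrototypeLearner` is hypothetical and is not Akida's learning rule or API.

```python
from typing import Dict, Hashable, Optional

import numpy as np


class PrototypeLearner:
    """Hypothetical on-device learner: one running-mean embedding per class."""

    def __init__(self, dim: int):
        self.dim = dim
        self.sums: Dict[Hashable, np.ndarray] = {}
        self.counts: Dict[Hashable, int] = {}

    def learn(self, embedding, label: Hashable) -> None:
        """Add one labeled example; new labels create a new class."""
        e = np.asarray(embedding, dtype=float)
        if label not in self.sums:
            self.sums[label] = np.zeros(self.dim)
            self.counts[label] = 0
        self.sums[label] += e
        self.counts[label] += 1

    def predict(self, embedding) -> Optional[Hashable]:
        """Return the label whose class mean is nearest (None if untrained)."""
        e = np.asarray(embedding, dtype=float)
        best, best_dist = None, np.inf
        for label, s in self.sums.items():
            centroid = s / self.counts[label]
            d = np.linalg.norm(e - centroid)
            if d < best_dist:
                best, best_dist = label, d
        return best


if __name__ == "__main__":
    learner = PrototypeLearner(dim=2)
    learner.learn([0.0, 0.0], "idle")
    learner.learn([0.2, 0.0], "idle")
    learner.learn([5.0, 5.0], "wave")
    print(learner.predict([0.1, 0.1]), learner.predict([4.0, 6.0]))
```

Learning a new class needs only a few examples and a few additions, which is why this family of methods is often used for edge personalization.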

Reduce CPU Load

An intelligent DMA (direct memory access) engine reduces or eliminates the need for CPU involvement, lightening the system’s processing load.

Fully Digital and Proven in Silicon

Akida’s fully digital design is scalable, portable, and already running in production hardware.

Built-in Privacy

Your data stays private because computation is performed locally and only weights are saved for learning.

Built for Your Models

Akida supports CNNs, DNNs, RNNs, and more. Use MetaTF™ to convert and optimize models for sparse compute.

Deploy in the Real World

Prototype using Akida hardware, FPGAs, or simulations. Test models in real time on streaming data.

Smaller Models. Better Accuracy. Smarter Design.

The BrainChip™ model strategy is built for performance, efficiency, and real-world deployment. We use state space models with temporal awareness to reduce model size and compute requirements while delivering better results than conventional models.

Supported Architectures

CNNs and Spatio-Temporal CNNs

Optimized for spatial and time-aware tasks like image recognition, gesture detection, and vibration analysis.

State Space Models (SSMs)

A new class of neural networks that combine temporal awareness with training efficiency. SSMs outperform traditional RNNs like LSTMs and GRUs in scalability and training speed.
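One reason SSMs can combine streaming inference with efficient training is that a linear time-invariant SSM (as in S4-style models) can be evaluated two equivalent ways: as a recurrence with a fixed-size state, or as a convolution over the whole sequence. The sketch below assumes a diagonal state matrix and scalar input/output; the source does not say which SSM variant BrainChip uses, and `ssm_scan` and `ssm_conv` are illustrative names.

```python
import numpy as np


def ssm_scan(a, b, c, u):
    """Recurrent view of a diagonal linear SSM (one step per input):
        x_k = a * x_{k-1} + b * u_k   (a holds the diagonal of A)
        y_k = c . x_k
    """
    a, b, c = (np.asarray(v, dtype=float) for v in (a, b, c))
    x = np.zeros_like(a)  # hidden state, fixed size regardless of sequence length
    ys = []
    for u_k in np.asarray(u, dtype=float):
        x = a * x + b * u_k
        ys.append(c @ x)
    return np.array(ys)


def ssm_conv(a, b, c, u):
    """Convolutional view of the same SSM: y = K * u with K_j = c . (a**j * b).
    All outputs are computed from the whole sequence at once (an FFT could
    be used instead of np.convolve for long sequences)."""
    a, b, c = (np.asarray(v, dtype=float) for v in (a, b, c))
    u = np.asarray(u, dtype=float)
    j = np.arange(len(u))[:, None]       # (L, 1) lags
    kernel = (a[None, :] ** j * b) @ c   # (L,) impulse response
    return np.convolve(u, kernel)[: len(u)]


if __name__ == "__main__":
    a = [0.9, 0.5, -0.3]
    b = [1.0, 0.5, 2.0]
    c = [0.2, -1.0, 0.7]
    u = [1.0, 0.0, 2.0, -1.0, 0.5]
    print(np.allclose(ssm_scan(a, b, c, u), ssm_conv(a, b, c, u)))
```

The recurrent form suits streaming inference, since each new input costs one state update; the convolutional form suits training, since an entire sequence can be processed in parallel rather than step by step as in an LSTM or GRU.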

Temporal Event-Based Neural Networks (TENNs™)

TENNs are ideal for “anything with movement” because they track streaming data across time. TENNs extend state space models with event-based awareness to simplify motion tracking, object detection, and audio processing, using less memory and fewer computations than transformers.

How Our Neural Networks Improve on Traditional Designs

This Approach Isn’t New to Us

BrainChip was founded on a vision inspired by nature’s most efficient processor, the brain, and has more than 15 years of AI architecture research and development behind it. Akida brings that vision to life by combining models, tools, and hardware to deliver super-sparse compute for real-time AI at the edge.