Cutting-edge AI Processors and Development Tools

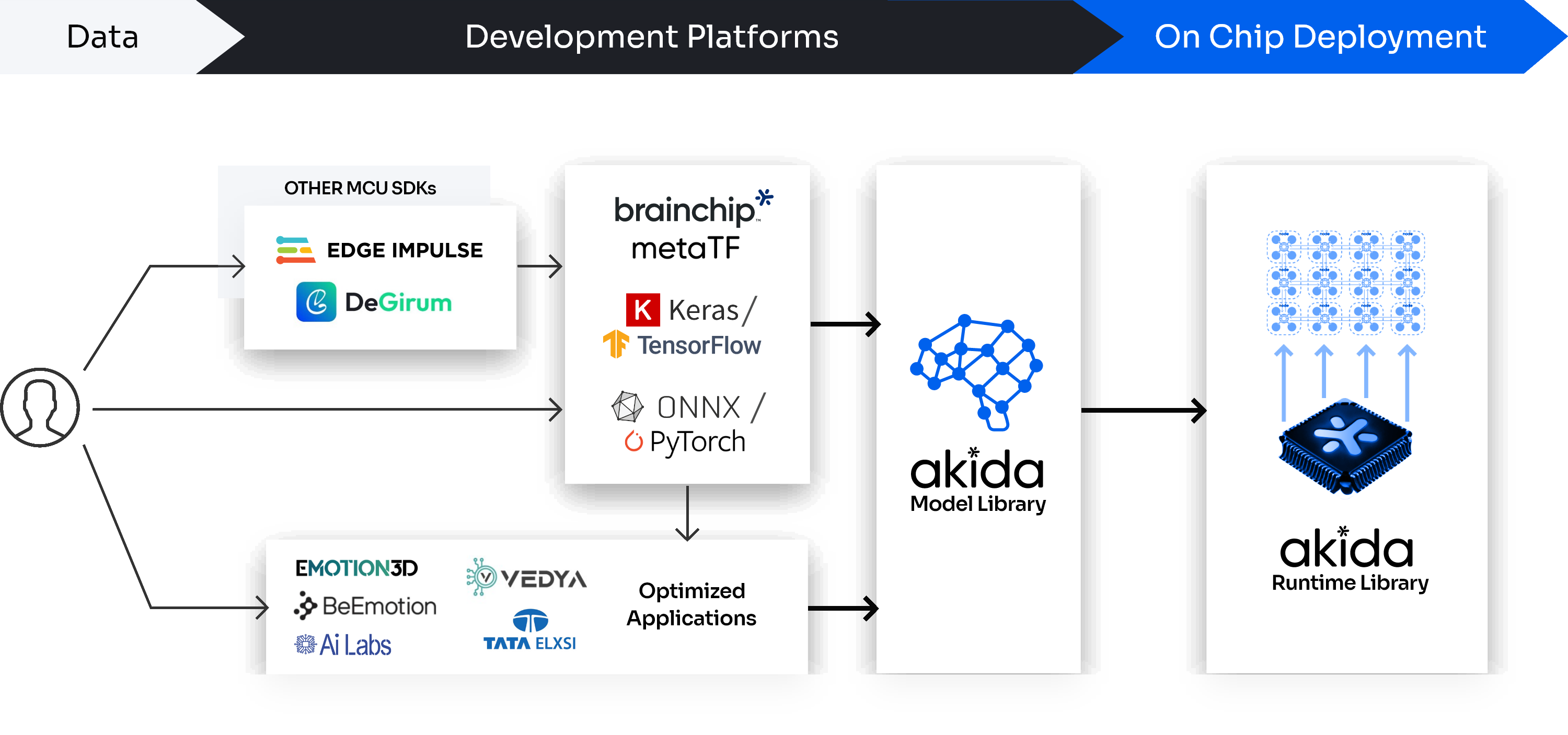

BrainChip™ offers a full suite of Akida development tools for building Edge AI solutions, centered on the MetaTF™ software environment and supported by hardware platforms such as boards, boxes, and FPGAs.

MetaTF Software

Development Tools

MetaTF is a developer environment built to support edge AI innovation. It is the bridge between developers and BrainChip's Akida technology, lowering the barrier to entry and providing clear, accessible pathways to start building with Akida.

Designed for engineers building Edge AI applications, the Akida Development Environment (MetaTF) is a complete machine learning framework for creating, training, testing, and deploying neural networks on the Akida Neuromorphic Processor Platform. MetaTF includes a processor IP simulator for model execution, as well as support for Akida hardware like the AKD1000 reference SoC and Akida 2 FPGA platform.

Inspired by the Keras API, MetaTF provides a high-level Python API for neural networks. This API facilitates early evaluation, design, final tuning, and productization of neural network models.

MetaTF consists of four Python packages that build on the TensorFlow framework and are installed from the PyPI repository with the pip command.

The Four MetaTF Packages

Model Zoo (akida-models)

to directly load quantized models, or to instantiate and train Akida-compatible models

Quantization Tool (quantizeml)

for quantizing models to low-bitwidth weights and outputs

Conversion Tool (cnn2snn)

to convert models into a binary format that can be executed on an Akida platform

Interface to the Akida Neuromorphic Processor (akida)

including a runtime, a Hardware Abstraction Layer (HAL), and a software backend. Together they allow the Akida Neuromorphic Processor to be simulated and the Akida hardware development platforms to be used.
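To make "low-bitwidth weights" concrete, here is a minimal sketch of generic symmetric, per-tensor uniform quantization to 4-bit signed integers. It is a textbook illustration in plain Python, not the algorithm used by the Quantization Tool; the function names `quantize_symmetric` and `dequantize` and the single per-tensor scale are assumptions made for this example.

```python
from typing import List, Tuple


def quantize_symmetric(weights: List[float], bits: int = 4) -> Tuple[List[int], float]:
    """Map floats to signed integers in [-qmax, qmax] using one per-tensor scale (hypothetical helper)."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit; the symmetric range leaves -8 unused
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clip to the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integers and the scale."""
    return [v * scale for v in q]


weights = [0.7, -0.3, 0.12, -0.7, 0.0]
q, scale = quantize_symmetric(weights, bits=4)
print(q)  # [7, -3, 1, -7, 0]
print(dequantize(q, scale))
```

Each weight is stored as a small integer plus one shared scale, so the reconstruction error per weight is at most half a quantization step. The same idea applies to layer outputs, which this sketch does not cover.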

Cloud Tools

AKIDA Cloud

Akida Cloud is a service that provides a pre-configured environment for system and chip designers to evaluate the efficiency and performance of neural models on remotely hosted BrainChip Akida IP. It’s a platform for demonstrating, emulating, and validating Akida IP easily and efficiently.

Hardware Development Tools

AKD1000 PCIe Development Board

The AKD1000 PCIe Development Board is a compact, high-performance platform designed to accelerate Edge AI development with BrainChip’s Akida technology. Featuring the AKD1000 neuromorphic processor in a standard PCIe form factor, it enables seamless integration into existing systems for efficient prototyping, testing, and deployment of AI applications at the edge.

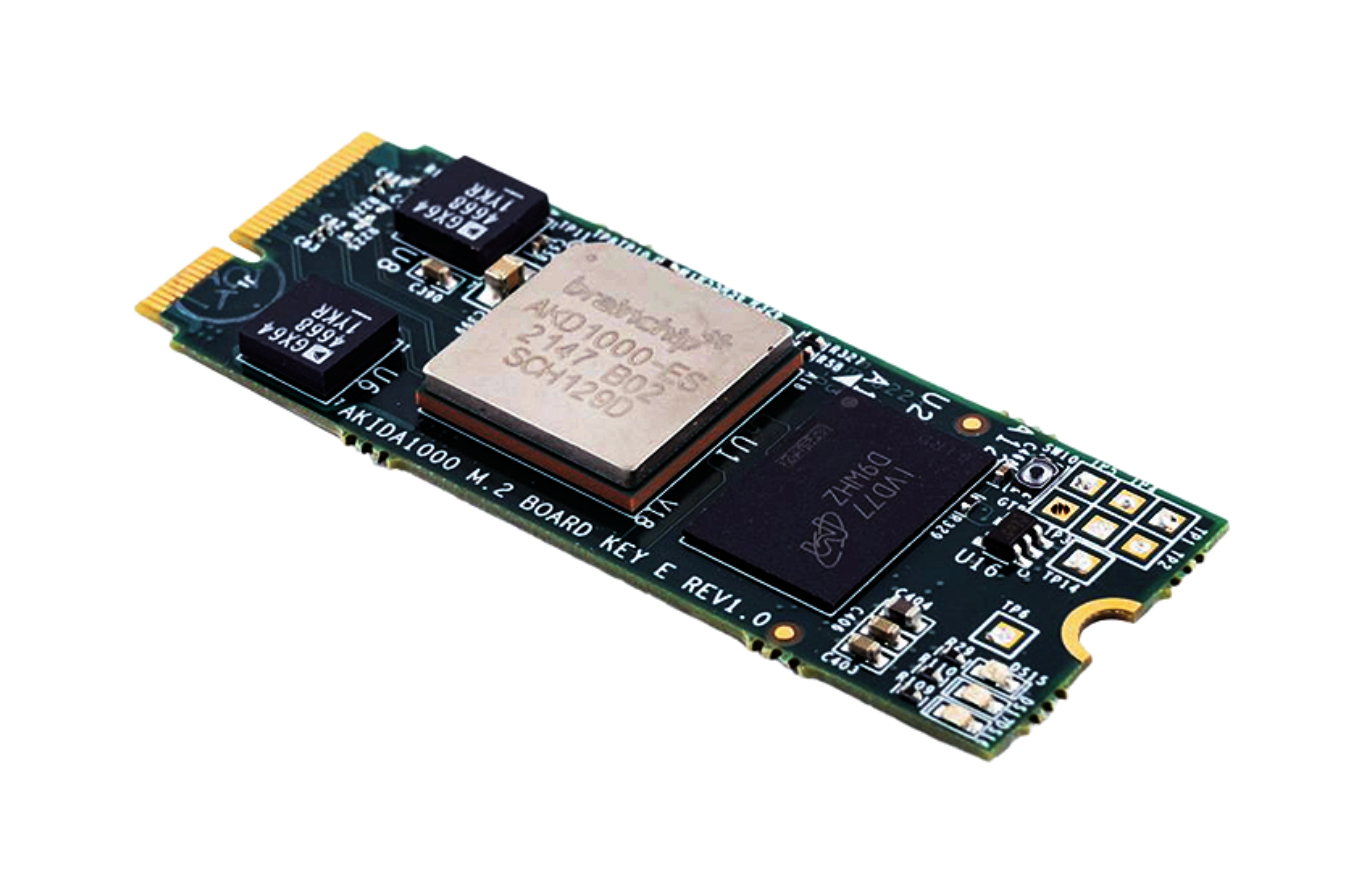

AKD1000 M.2 Development Card

The Akida M.2 AKD1000 Card accelerates CNN models using BrainChip's ultra-energy-efficient, purely digital, event-based processing architecture. The AKD1000 has built-in capabilities to execute these networks without a host CPU, enabling stand-alone, always-on, ultra-low-power operation. BrainChip's unique MetaTF software flow enables developers to compile and optimize their chosen models for the Akida M.2 Card.

The Akida M.2 AKD1000 can be paired with existing single-board computers to enable AI applications. Its low power consumption supports the creation of very compact, ultra-low-power, portable, and intelligent devices for wearables, remote sensors, always-on wake-up devices, healthcare, consumer, smart home, and AIoT applications. The Akida Runtime software manages network processing to fully utilize the available resources and can automatically partition execution into multiple passes.
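The idea of "partitioning execution into multiple passes" can be illustrated with a simple model: when a network needs more processing resources than the device offers at once, consecutive layers are grouped into sequential passes that each fit. The sketch below is a hypothetical greedy illustration only; the function `partition_into_passes`, the per-layer costs, and the budget of 20 units are assumptions, and it does not describe how the Akida Runtime actually partitions a model.

```python
from typing import List


def partition_into_passes(layer_costs: List[int], budget: int) -> List[List[int]]:
    """Greedily group consecutive layer indices into passes whose total cost fits the budget (hypothetical)."""
    passes: List[List[int]] = []
    current: List[int] = []
    used = 0
    for idx, cost in enumerate(layer_costs):
        if cost > budget:
            raise ValueError(f"layer {idx} needs {cost} units, more than the budget of {budget}")
        if used + cost > budget:
            # This layer does not fit: close the current pass and start a new one.
            passes.append(current)
            current, used = [], 0
        current.append(idx)
        used += cost
    if current:
        passes.append(current)
    return passes


# Hypothetical per-layer resource needs for a 6-layer model on a device with 20 units.
print(partition_into_passes([6, 8, 4, 10, 7, 5], budget=20))  # [[0, 1, 2], [3, 4], [5]]
```

Keeping layers in their original order means each pass can consume the previous pass's output, at the cost of extra latency for every additional pass.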

AKIDA 1 Edge AI Box

BrainChip and VVDN collaborated to create the innovative and powerful Akida Edge AI Box. This collaboration leverages BrainChip’s AKD1000 AI Accelerator and NXP i.MX 8M Plus SoC to produce a compact system with the capability to execute diverse AI applications at the edge. Applications such as video analytics, face recognition, and object detection are among the many use cases supported by this solution. This design is a turnkey end product that can be customized by our partner VVDN for volume edge AI use cases.

AKIDA FPGA Development Platform

The Akida FPGA Platform is a hardware product for loading Akida IP configurations and neural models to evaluate model performance and execution on BrainChip Akida IP. It provides system and chip designers with a pre-configured environment for demonstration, emulation, validation, and system integration. The platform showcases BrainChip's Akida AI neural processing acceleration, which is scalable, configurable, and programmable to support CNNs and Temporal Event-Based Neural Networks (TENNs).

AKD1000 System on Chip

The Akida AKD1000 System-on-Chip (SoC) is the first in a new breed of event-domain neural processing devices. Implemented in a purely digital 28nm logic process, this event-based neural processor is inherently lower power than traditional deep learning accelerators. It performs incremental and one-shot learning on chip, for applications where personalization, privacy, and security are essential. With the BrainChip Akida Development Environment flow, standard neural networks are converted to run on the Akida event-domain processor with very low power consumption and high throughput.
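One way to see why event-based processing can save energy: in an event domain, only nonzero activations generate work. The sketch below is a conceptual illustration in plain Python, not a model of the AKD1000 hardware; the functions `dense_dot` and `event_dot` are hypothetical, and they simply count the multiply-accumulate (MAC) operations a dense and an event-driven dot product perform on the same sparse input.

```python
from typing import List, Tuple


def dense_dot(x: List[int], w: List[int]) -> Tuple[int, int]:
    """Conventional dot product: one MAC per input, whether the input is zero or not."""
    return sum(a * b for a, b in zip(x, w)), len(x)


def event_dot(x: List[int], w: List[int]) -> Tuple[int, int]:
    """Event-driven dot product: only nonzero inputs (events) trigger a MAC."""
    events = [(i, a) for i, a in enumerate(x) if a != 0]
    return sum(a * w[i] for i, a in events), len(events)


x = [0, 3, 0, 0, 1, 0, 0, 2]  # sparse activations, e.g. after a ReLU
w = [5, -1, 2, 7, 4, -3, 1, 6]
print(dense_dot(x, w))  # (13, 8): same result, 8 MACs
print(event_dot(x, w))  # (13, 3): same result, 3 MACs
```

Both versions produce the same result; the event-driven one does work proportional to the number of events rather than the input size, so the savings grow with activation sparsity.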

The Akida AKD1000 contains all the interfaces and data-to-event converters needed for embedded systems, and it can be scaled to 32 devices for edge computing applications.

Key Benefits

- Designed for Low-Power Neural Network Processing
  • Highly efficient images/second per watt with superior accuracy

- On-Chip Learning
  • Enables customization

- Industry Standard Development Environment
  • TensorFlow and Keras APIs

Specifications

- Arm Cortex-M4 On-Chip Processor

- FPU and DSP for pre/post-processing of data

- Reliable 28nm CMOS digital logic process

- Small FCBGA package

Akida Neuron Fabric

- Array of 20 Neural Processing Cores

- 2560 MACs (4-bit × 4-bit) @ 300 MHz for 1.5 TOPS

- Interconnect network for spike transmission
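The 1.5 TOPS figure in the specification list follows from the MAC count and clock rate, assuming the common convention that each multiply-accumulate counts as two operations (one multiply and one add). The quick check below is a back-of-the-envelope peak figure under that assumption, not a measured throughput.

```python
# Peak throughput of the Akida Neuron Fabric from the listed specifications.
# Assumption: each MAC counts as 2 operations (multiply + add), the usual TOPS convention.
NUM_MACS = 2560
CLOCK_HZ = 300_000_000  # 300 MHz
OPS_PER_MAC = 2

ops_per_second = NUM_MACS * CLOCK_HZ * OPS_PER_MAC
tops = ops_per_second / 1e12
print(f"{tops:.3f} TOPS")  # 1.536 TOPS, quoted as 1.5 TOPS
```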

AKIDA GenAI FPGA Development Platform

The Akida GenAI FPGA is a hardware development target on which system and chip designers can install and exercise Akida GenAI IP core configurations, executing neural models for validation and system integration. The platform supports BrainChip's GenAI neural processing acceleration, which is scalable, configurable, and programmable to support TENNs™ and state-space neural network models for large language models.
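For readers new to state-space models, the sketch below shows the generic discrete linear state-space recurrence x_k = A x_{k-1} + B u_k, y_k = C · x_k that this family of models builds on. It is a textbook illustration with made-up 2-state matrices, not BrainChip's TENNs or GenAI IP; the helper names `matvec` and `ssm_scan` are hypothetical.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def ssm_scan(A: Matrix, B: Vector, C: Vector, inputs: List[float]) -> List[float]:
    """Run x_k = A x_{k-1} + B u_k, y_k = C . x_k over a scalar input sequence, starting from x_0 = 0."""
    x = [0.0] * len(A)
    outputs = []
    for u in inputs:
        ax = matvec(A, x)
        x = [ax_i + b_i * u for ax_i, b_i in zip(ax, B)]  # state update
        outputs.append(sum(c_i * x_i for c_i, x_i in zip(C, x)))  # readout
    return outputs


A = [[0.5, 0.0], [0.0, 0.25]]  # diagonal and stable (eigenvalues inside the unit circle)
B = [1.0, 1.0]
C = [1.0, 1.0]
print(ssm_scan(A, B, C, [1.0, 0.0, 0.0]))  # [2.0, 0.75, 0.3125]
```

Because the state is a fixed-size vector, the memory needed per step stays constant regardless of sequence length, which is one reason state-space models are attractive for resource-constrained edge hardware.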

Request Access

Be among the first to access BrainChip's advanced AkidaNet/TENNs models, built for efficient, low-power AI at the edge. Models include audio denoising, automatic speech recognition, and language models such as a compact LLM and an LLM with retrieval-augmented generation (RAG), for intelligent, real-time applications.