Vision Transformers (ViTs) – Their Popularity And Unique Architecture

In today’s world, computer vision has become essential for solving a wide range of problems.

However, traditional computer vision techniques like Convolutional Neural Networks (CNNs) have some limitations when analyzing images.

CNNs work by passing images through a series of convolutional and pooling layers to extract relevant features. However, each convolution only sees a small local neighborhood, so relating distant regions of a large, complex image requires many stacked layers. This is where Vision Transformers come in.

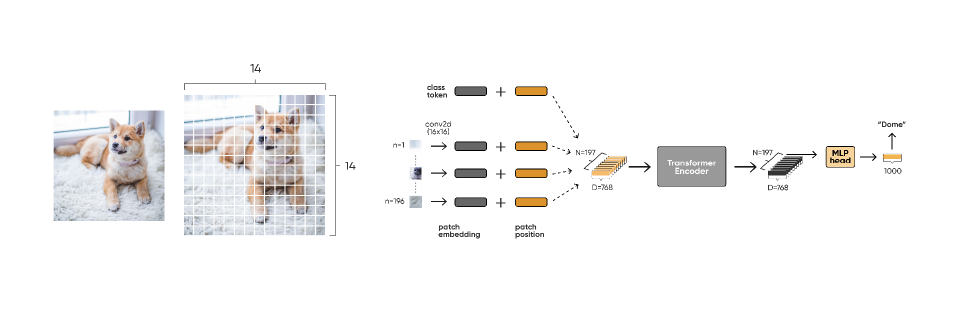

Vision Transformers, or ViTs, are a type of deep learning model that uses self-attention mechanisms to process visual data. They were introduced in a 2020 paper by Dosovitskiy et al. and have since gained popularity in computer vision research.

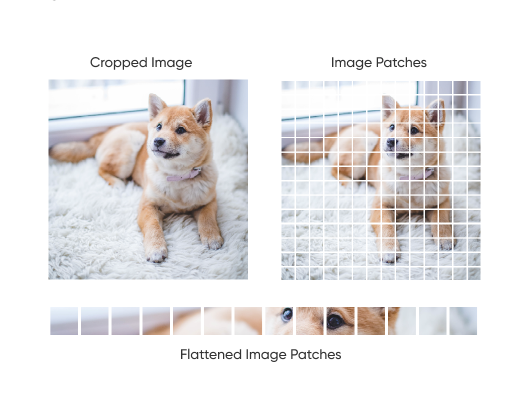

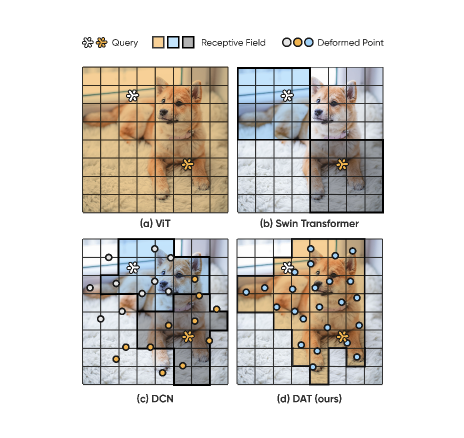

In a Vision Transformer, an image is first divided into patches, which are then flattened and fed into a multi-layer transformer network. The self-attention mechanism allows the model to attend to different parts of the image at different scales, enabling it to simultaneously capture global and local features. The transformer’s output is passed through a final classification layer to obtain the predicted class label.

The result is a model whose self-attention mechanism lets it focus on different parts of the input image across the spatial dimension.
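The patching step described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `image_to_patches`, the 224×224 input size, and the 16-pixel patch size are assumptions chosen for the example, and a full ViT would typically also apply a learned linear projection and position embeddings before the transformer layers.

```python
import numpy as np

def image_to_patches(image: np.ndarray, patch_size: int) -> np.ndarray:
    """Split an (H, W, C) image into flattened, non-overlapping patches.

    Returns an array of shape (num_patches, patch_size * patch_size * C).
    """
    height, width, channels = image.shape
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    rows, cols = height // patch_size, width // patch_size
    # (rows, P, cols, P, C) -> (rows, cols, P, P, C): group pixels by patch
    patches = image.reshape(rows, patch_size, cols, patch_size, channels)
    patches = patches.transpose(0, 2, 1, 3, 4)
    # Flatten each patch into one vector, giving a sequence of "tokens"
    return patches.reshape(rows * cols, patch_size * patch_size * channels)

# Illustrative input: a random 224x224 RGB image (assumed size)
rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))
tokens = image_to_patches(image, patch_size=16)
print(tokens.shape)  # (196, 768): 14 x 14 patches, each 16 * 16 * 3 values
```

Each row of `tokens` plays the role that a word embedding plays in a language transformer: it is one element of the sequence the network attends over.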

ViTs can achieve higher accuracy than CNNs on image classification tasks, particularly when pre-trained on large datasets.

Because ViTs use self-attention, they can learn features from spatially related regions, which makes them well suited to object detection, tracking, and segmentation. They also generalize better when trained on a distributed dataset.

This is because transformers use multi-head attention, a mechanism originally developed to extract meaning from sequences of words.

In a vision transformer, the input to the network is no longer a sequence of words but patches and frames that carry meaning within an image and across the several images that make up a video. Vision transformers use multi-head attention to focus on specific regions of an image.
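To make the idea concrete, here is a hedged NumPy sketch of multi-head self-attention over a sequence of patch tokens. It follows the standard scaled dot-product formulation; the function names, the random weight matrices, and the sizes (196 tokens, 64 dimensions, 4 heads) are illustrative assumptions rather than values from any particular model.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(tokens, w_q, w_k, w_v, w_o, num_heads):
    """tokens: (N, D); each weight matrix: (D, D).

    Returns (output of shape (N, D), attention of shape (heads, N, N)).
    """
    n, d = tokens.shape
    head_dim = d // num_heads

    def split_heads(x):
        # (N, D) -> (heads, N, head_dim)
        return x.reshape(n, num_heads, head_dim).transpose(1, 0, 2)

    q = split_heads(tokens @ w_q)
    k = split_heads(tokens @ w_k)
    v = split_heads(tokens @ w_v)
    # Every patch scores every other patch, independently in each head
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)
    attn = softmax(scores, axis=-1)
    context = attn @ v                                   # (heads, N, head_dim)
    merged = context.transpose(1, 0, 2).reshape(n, d)    # concatenate heads
    return merged @ w_o, attn

# Illustrative sizes and random weights (assumptions for the example)
rng = np.random.default_rng(0)
n_tokens, dim, heads = 196, 64, 4
x = rng.standard_normal((n_tokens, dim))
w_q, w_k, w_v, w_o = (rng.standard_normal((dim, dim)) * 0.1 for _ in range(4))
output, attn = multi_head_self_attention(x, w_q, w_k, w_v, w_o, heads)
print(output.shape, attn.shape)  # (196, 64) (4, 196, 196)
```

Each of the four attention maps in `attn` is a 196×196 table telling how strongly one patch attends to every other patch, so different heads are free to specialize in different spatial relationships.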

Vision transformers are popular for tasks like image classification, object detection, tracking, and segmentation.

Enabled by Akida, Vision Transformers can be deployed remotely at low power consumption to serve multiple industries:

- In autonomous vehicles, they can be used for driver assistance and monitoring, alertness detection, and lane assistance.

- In agriculture, they can be used to monitor crop health, identify diseases or pests, and estimate yield.

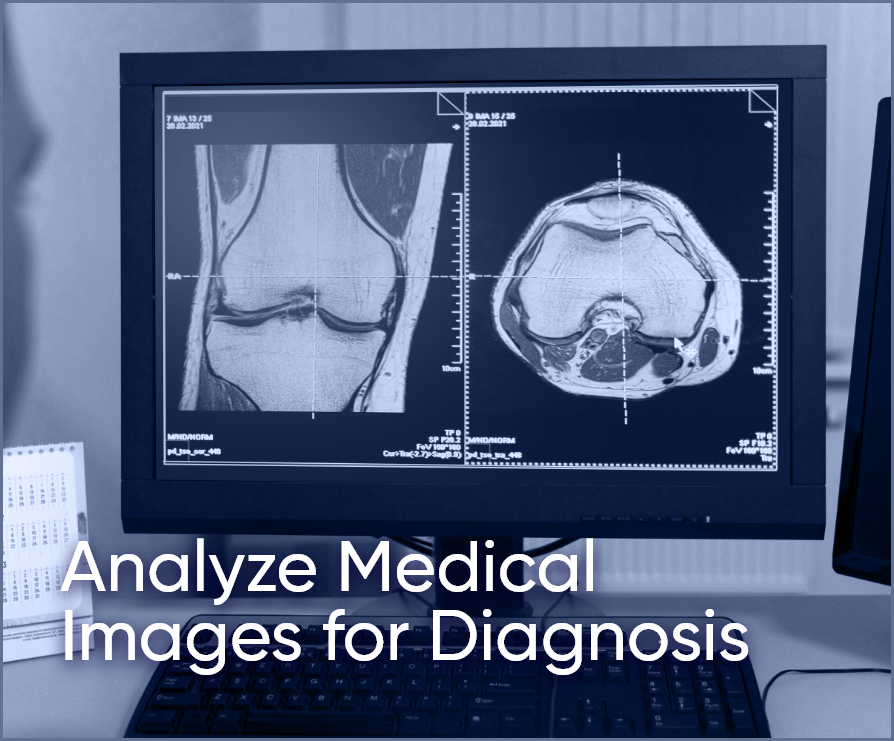

- In healthcare, they can help analyze medical images to aid diagnosis.

- In manufacturing, they can automate quality control processes.

- In smart devices, they can be used for user attention tracking, pose estimation, scene understanding, and similar tasks.