Developing CNNs for Neuromorphic Hardware

(Been There! Done That!)

By Nikunj Kotecha

We often hear that neuromorphic technology is cool, classy, low-power, next-gen hardware for AI and the most suitable technology for edge devices. Neuromorphic technology mimics the brain, the most efficient computation engine known, to create a new computing and natural learning paradigm for devices. Neuromorphic design is complemented by Spiking Neural Networks (SNNs), which emulate how neurons fire and therefore compute only when absolutely necessary. This is unlike today's "MAC monster" engines, which execute large numbers of MACs (multiply-accumulate operations, the basis of most AI computation) in parallel, many of which are often discarded.
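To make the "compute only when necessary" idea concrete, here is a minimal conceptual sketch in plain Python. It is not how Akida or any SNN is actually implemented; it simply assumes a fully connected layer and a sparse input vector (both hypothetical), and counts the multiply-accumulates a dense engine performs versus an event-driven one that skips inputs that did not "fire".

```python
# Conceptual illustration only (not Akida's implementation): compare the work
# done by a dense "MAC monster" layer with an event-driven layer that only
# processes non-zero ("firing") inputs.
from typing import List, Tuple

Matrix = List[List[float]]


def dense_layer(inputs: List[float], weights: Matrix) -> Tuple[List[float], int]:
    """Perform every multiply-accumulate, including those on zero inputs."""
    n_out = len(weights[0])
    outputs = [0.0] * n_out
    macs = 0
    for i, x in enumerate(inputs):
        for j in range(n_out):
            outputs[j] += x * weights[i][j]
            macs += 1
    return outputs, macs


def event_driven_layer(inputs: List[float], weights: Matrix) -> Tuple[List[float], int]:
    """Only non-zero inputs (events) trigger any computation."""
    n_out = len(weights[0])
    outputs = [0.0] * n_out
    macs = 0
    for i, x in enumerate(inputs):
        if x == 0:
            continue  # no event, no work
        for j in range(n_out):
            outputs[j] += x * weights[i][j]
            macs += 1
    return outputs, macs


if __name__ == "__main__":
    # Hypothetical sparse input: only 2 of 8 inputs are active.
    inputs = [0, 0, 1, 0, 0, 0, 1, 0]
    weights = [[(i + j) % 3 - 1 for j in range(4)] for i in range(8)]
    dense_out, dense_macs = dense_layer(inputs, weights)
    event_out, event_macs = event_driven_layer(inputs, weights)
    assert dense_out == event_out  # same result, less work
    print(dense_macs, event_macs)  # 32 8
```

Both layers produce identical outputs, but with only a quarter of the inputs active the event-driven version performs a quarter of the MACs. The sparser the activity, the larger the saving, which is the intuition behind the efficiency claims for neuromorphic designs.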

So neuromorphic hardware is exciting! However, it is very difficult to develop current state-of-the-art solutions for neuromorphic hardware and deploy them on it. These platforms are also extremely limiting when it comes to running existing convolutional neural network (CNN) models. The difficulty stems primarily from the assumption that neuromorphic hardware is analog and can only run advanced SNN algorithms, which are currently in short supply. As a result, today's production models, typically accelerated by traditional Deep Learning Accelerators (DLAs) as a safe path to commercialization, are not supported by neuromorphic hardware. But BrainChip Akida™ is changing the game.

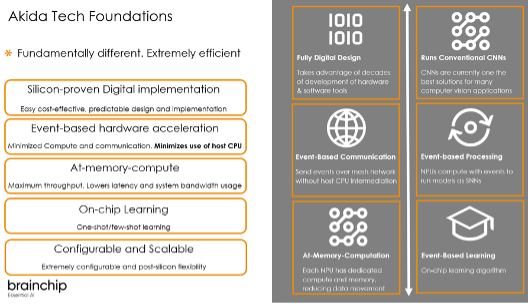

Akida is a fully digital, synthesizable (and thus process-independent), silicon-proven neuromorphic technology. It is designed to be scalable and portable across foundries and architected to be embedded into low-power edge devices. It fully supports acceleration of feed-forward CNNs and also accelerates other neural networks, such as Deep Neural Networks (DNNs) and Recurrent Neural Networks (RNNs), while providing the efficiency benefits of a neuromorphic design. In short, it removes risk and simplifies adoption.

At BrainChip, our mission is to unlock the future of AI. We believe we can do this by enabling edge devices with Akida, a next-generation technology that makes these devices smarter and more capable, and ultimately gives end users privacy and security, energy and cost savings, and access to new features. We realize that to achieve our mission, we must make it easy for our end users (who may or may not be AI experts) to use our technology and must support current solutions. BrainChip provides development boards built on its reference chip, AKD1000, for anybody in the community to use for building prototypes. For commercial use, BrainChip licenses the technology for integration into a custom ASIC, board, or module that can be used in millions of edge devices.

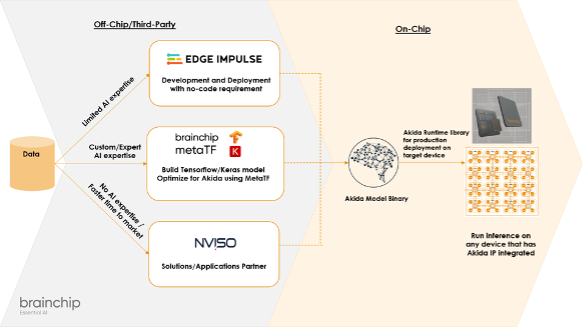

BrainChip promotes neuromorphic technology with proven silicon, such as AKD1000, but also focuses on enabling end users to gain the benefits of this technology with little to no knowledge of neuromorphic science. There are three ways to leverage Akida technology and deploy complex models (refer to Figure 2):

Figure 2. BrainChip development ecosystem and access to deployment of solutions to Akida technology

1. Through the BrainChip MetaTF™ framework: MetaTF is a free, robust ML framework with Python packages for developing models and converting TensorFlow/Keras models to run on Akida. It is popular among AI experts and custom developers. The MetaTF Python packages are public, and developers can access the framework here: https://doc.brainchipinc.com

2. Through Edge Impulse Studio: Edge Impulse is a platform that provides end-to-end development and deployment of machine learning models on target hardware, requiring little to no coding or AI expertise. Core functions of the BrainChip MetaTF framework are embedded into Edge Impulse Studio to deploy models onto Akida-based silicon, such as the AKD1000 SoC. To learn more about how to develop using Edge Impulse, visit https://www.edgeimpulse.com

3. Through BrainChip Solutions Partners such as NVISO: BrainChip has partnered with solutions providers and enabled them to create complex models using MetaTF and to build applications for specific, common AI use cases such as human monitoring. This enables faster time to market for solutions built on Akida technology. To learn more about BrainChip Solutions Partners, contact us at sales@brainchip.com.

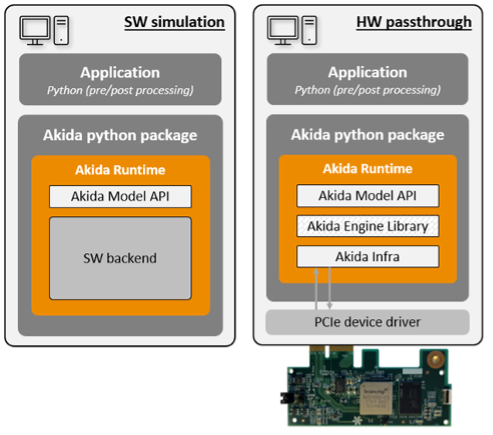

Each of these avenues lets developers create a functioning model that is suitable for running on Akida technology. The models are converted using MetaTF and saved as a serialized byte file. They can be evaluated offline in simulation, using the software runtime provided with MetaTF, or on the AKD1000 mini PCIe development board, using the hardware backend, as shown in Figure 3a. Once through the evaluation stage, these models can be deployed into production on any target device with Akida technology. The Akida Runtime library, a low-level, OS-agnostic library, is used to compile the saved model and run inference on any target device that has Akida technology. Customers who license Akida technology for their devices can compile this Akida Runtime library with their own application software and host OS, as shown in Figure 3b.

BrainChip is very excited about the ecosystem available for our end users to develop and deploy complex AI models on Akida neuromorphic technology. Expert AI developers who are familiar with CNN architectures can use the BrainChip MetaTF framework to deploy familiar models on Akida. Developers with little to no coding experience can use Edge Impulse Studio to deploy models on Akida technology, and users who want faster time to market can work with Solutions Partners such as NVISO.

To learn more about how you can harness the power of AI, request a demo or visit BrainChip.com.

Nikunj Kotecha is a Machine Learning Solutions Architect at BrainChip. With many years of experience and a strong programming background, Kotecha brings a passion for AI-driven solutions to the BrainChip team, with a unique eye for data visualization and analysis. He develops neural networks for neuromorphic hardware and event-based processors and optimizes CNN-based networks for conversion to SNNs. Kotecha holds an MS in Computer Science from Rochester Institute of Technology.