Right Sizing AI for Embedded Applications

By Anand Rangarajan

Director, End Markets, GlobalFoundries

By Todd Vierra

Vice President, Customer Engagement, BrainChip

We all know the AI revolution train is heading straight for the Embedded Station. Some of us are already in the driver’s seat, while others are waiting for the first movers to pave the way so we can become fast adopters. No matter where you are on this journey, one thing becomes clear: AI must adapt to the embedded application sandbox—not the other way around.

Embedded applications typically operate within a power envelope ranging from milliwatts to around 10 watts. For AI to be effective in many embedded markets, it must respect the power-performance boundaries of the application. Imagine your favorite device that you charge once a day. If adding embedded AI to a product means you now need to charge it every four hours, you are likely to stop using the product altogether.
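The charging example can be made concrete with a little arithmetic. The sketch below is illustrative only: the battery capacity and power figures are hypothetical values chosen so that the baseline device lasts one day, and they do not describe any real product.

```python
# Illustrative battery-life arithmetic. All figures are hypothetical assumptions.

def runtime_hours(battery_mwh: float, avg_power_mw: float) -> float:
    """Hours of operation from a full charge at a given average power draw."""
    if avg_power_mw <= 0:
        raise ValueError("average power must be positive")
    return battery_mwh / avg_power_mw


def ai_power_budget_mw(battery_mwh: float, baseline_mw: float,
                       target_hours: float) -> float:
    """Largest average AI power draw that still meets the target runtime."""
    return battery_mwh / target_hours - baseline_mw


BATTERY_MWH = 1200      # assumed battery capacity (1.2 Wh)
BASELINE_MW = 50        # assumed average draw of the product without AI
AI_ALWAYS_ON_MW = 250   # assumed average draw of an oversized AI block

baseline_hours = runtime_hours(BATTERY_MWH, BASELINE_MW)
with_ai_hours = runtime_hours(BATTERY_MWH, BASELINE_MW + AI_ALWAYS_ON_MW)
budget_mw = ai_power_budget_mw(BATTERY_MWH, BASELINE_MW, target_hours=20)

print(f"Without AI: {baseline_hours:.0f} h per charge")
print(f"With oversized AI: {with_ai_hours:.0f} h per charge")
print(f"AI budget for a 20 h target: {budget_mw:.0f} mW")
```

Under these assumed numbers, an AI block that averages 250 mW turns a once-a-day charge into a charge every four hours, while keeping 20 hours of runtime leaves only about 10 mW for AI.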

This is where embedded AI fundamentally differs from cloud AI. In the cloud, adding more compute is often the default solution. But in embedded systems, the level of AI compute must be dictated by what the overall power and performance constraints allow. You can’t just throw more compute silicon at the problem.

There are two key approaches to scaling AI effectively for embedded applications:

1. Process Technology

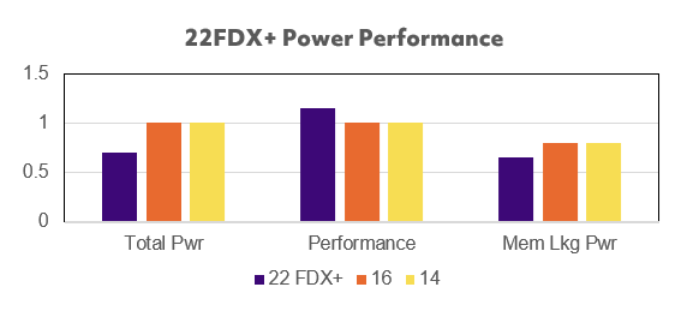

At the foundational level, advanced process technologies like GlobalFoundries’ 22FDX+ with Adaptive Body Biasing offer a compelling solution. These transistors can deliver high performance during compute-intensive tasks while maintaining low leakage during idle or always-on modes. This dynamic adaptability ensures that the overall power-performance integrity of the application is preserved.

2. Alternative Compute Architectures

Emerging architectures like neuromorphic computing are gaining attention for their ability to run inference at a fraction of the power, and with lower latency, compared to traditional architectures. These ultra-low-power solutions are particularly promising for applications where energy efficiency is paramount and real-time response is also important.

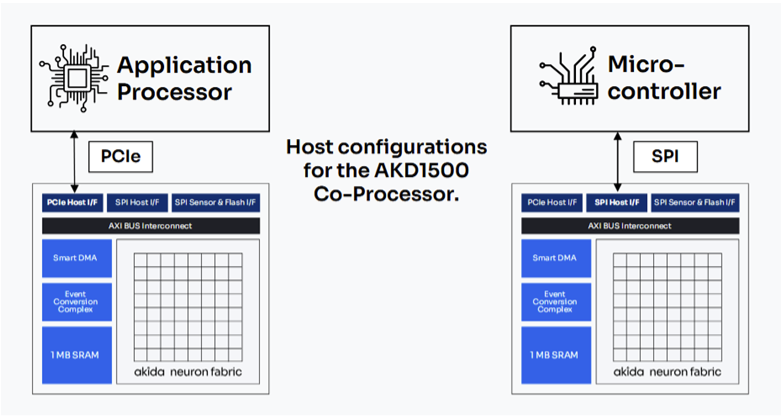

BrainChip’s AKD1500 Edge AI co-processor, built on GlobalFoundries’ 22FDX platform, demonstrates how neuromorphic design can make AI practical for the smallest and most power-sensitive devices. Powered by the company’s Akida™ technology, the chip uses an event-based approach: it processes only when there is information, avoiding the constant compute cycles that waste energy reading and writing to on-chip SRAM or off-chip DRAM in traditional AI systems. The co-processor performs event-based convolutions that exploit sparsity in activation maps and kernels throughout the whole network, and it significantly reduces power and latency by running as many layers as possible on the Akida™ fabric. The accompanying diagram shows all the interfaces, with the 8-node Akida IP as the centerpiece of the AI co-processor.

The design further improves efficiency by handling data locally and using operations that cut power consumption dramatically. The result is a chip that delivers real-time intelligence while operating within just a few hundred milliwatts, making it possible to add AI features to wearables, sensors, and other AIoT devices that previously relied on the cloud for such capability.
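To illustrate why event-based processing saves work on sparse data, here is a minimal sketch that compares a dense 1-D convolution with an event-driven one and counts multiply-accumulate (MAC) operations. It is a generic illustration of exploiting sparsity in activations and kernels, not a description of how the Akida fabric is implemented. The function names and the sample data are hypothetical.

```python
# Generic illustration of event-based computation on sparse data.
# Not BrainChip's implementation; names and data are hypothetical.

def dense_conv1d(signal, kernel):
    """Full 1-D convolution that multiplies every input by every weight."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    macs = 0
    for i, x in enumerate(signal):
        for j, w in enumerate(kernel):
            out[i + j] += x * w
            macs += 1
    return out, macs


def event_conv1d(signal, kernel):
    """Same result, but work is done only for non-zero inputs and weights."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    # Skip zero-valued weights once, up front (kernel sparsity).
    taps = [(j, w) for j, w in enumerate(kernel) if w != 0]
    macs = 0
    for i, x in enumerate(signal):
        if x == 0:
            continue  # no event at this position, so no computation
        for j, w in taps:
            out[i + j] += x * w
            macs += 1
    return out, macs


signal = [0, 0, 3, 0, 0, 0, 1, 0]   # sparse activations: 2 events out of 8
kernel = [1, 0, -1]                  # sparse kernel: 2 non-zero taps out of 3

dense_out, dense_macs = dense_conv1d(signal, kernel)
event_out, event_macs = event_conv1d(signal, kernel)

print("Outputs match:", dense_out == event_out)
print("Dense MACs:", dense_macs, "| Event-based MACs:", event_macs)
```

On this toy input both versions produce the same output, but the dense loop performs 24 MACs while the event-driven loop performs 4. The saving scales with how sparse the activations and kernels are.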

The Akida low-cost, low-power AI co-processor solution offers a silicon-proven design that has already demonstrated critical performance metrics, substantially reducing risk for developers. With fully functional interfaces tested at operational speeds and proven interoperability across multiple MCU and MPU boards, the platform ensures seamless integration. The AKD1500 co-processor supports both power-conscious MCUs via SPI4 and high-performance MPUs through M.2 and PCIe interfaces, providing flexibility across many configurations. Enabling software development early with silicon prototypes accelerates time to market. Several customers have already advanced to prototype stages, validating the design’s maturity and readiness for deployment. As an example, Onsor Technologies’ Nexa smart glasses use the AKD1500 for low-power inference to predict epileptic seizures, providing quality-of-life benefits for those suffering from epilepsy.

Best of all, the AKD1500 can be paired with any existing low-cost MCU that has an SPI interface, or with an applications processor that has a PCIe connection available for higher performance. Because it works with MCUs available today, adding the AKD1500 AI co-processor keeps time to market short.

Final Thoughts

As AI starts to sweep across the length and breadth of the embedded space, right sizing becomes not just a technical necessity but a strategic imperative. The goal isn’t to fit the biggest model into the smallest device – it’s to fit the right model into the right device, with the right balance of performance, power, and user experience.