TENNs: A New Approach to Streaming and Sequential Data

One Trillion!

That’s the approximate number of deployed IoT devices that we will rely on in our daily lives by 2030, and we’re already into the hundreds of billions today. Applications such as AI-assisted health care, advanced driver assistance systems (ADAS), speech and audio processing, and vision systems for surveillance and monitoring are just a fraction of those that collect data from sensors that track our health, our communications, and our surroundings in real time.

With such a large number of devices and their data competing for resources in the Cloud, it’s not surprising that there are plenty of obstacles to growth. Cloud-based processing power and global connectivity are being overwhelmed by the demand. The energy and cost required to deliver services like Generative AI are skyrocketing. The natural way to scale is with hybrid or distributed AI, with more processing done at the Edge. In anticipation of these trends, BrainChip’s neuromorphic computing architectures have been designed to process increasingly complex neural networks on Edge devices with little or no connection to the Cloud.

Yet not all sensor-generated data is created equal. Some applications are easy to process at the Edge: medical vital signs, audio processing, and anomaly detection in industrial settings all involve one-dimensional (1D) streaming data. In contrast, multidimensional (spatiotemporal) data generated by applications using live-streaming cameras, radar, and lidar, for example, puts much greater pressure on the limited computational resources of Edge devices.

Introducing TENNs

In March this year, we announced a new neural network architecture we call Temporal Event-based Neural Networks (TENNs). These are lightweight neural networks that process temporal data much more efficiently than traditional Recurrent Neural Networks (RNNs) such as Long Short-Term Memory (LSTM) networks or Gated Recurrent Units (GRUs). TENN models often require substantially fewer parameters and orders of magnitude fewer operations to achieve equivalent or better accuracy compared to traditional models. The second-generation Akida neural processor platform has also added extremely efficient three-dimensional (3D) convolution functions, so it can perform compute-heavy tasks on devices with limited memory and battery resources.

This feat is accomplished by combining the convolution in the 2D spatial domain with that of the 1D time domain, resulting in fewer parameters and fewer multiply-accumulate (MAC) operations per inference than previous networks. This reduced need for computation leads to a substantial reduction in energy use and power draw, making TENNs ideal for ultra-low-power Edge devices.
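To make the savings concrete, here is a rough sketch of the arithmetic. It assumes the combination works like a factorized convolution, where a 2D spatial convolution is followed by a 1D temporal convolution in place of one dense 3D kernel. The layer sizes, the function names, and the factorization itself are illustrative assumptions, not a description of how TENNs or Akida are actually implemented.

```python
# Illustrative arithmetic only: compares a dense 3D convolution with an
# ASSUMED factorization into a 2D spatial conv followed by a 1D temporal conv.
# Layer sizes and function names are hypothetical, not taken from TENNs or Akida.


def full_3d_params(c_in, c_out, k_t, k_h, k_w):
    """Weights in one dense 3D convolution layer (bias ignored)."""
    return c_in * c_out * k_t * k_h * k_w


def factorized_params(c_in, c_mid, c_out, k_t, k_h, k_w):
    """Weights in a 2D spatial conv (c_in -> c_mid) plus a 1D temporal conv (c_mid -> c_out)."""
    spatial = c_in * c_mid * k_h * k_w
    temporal = c_mid * c_out * k_t
    return spatial + temporal


def macs(params, frames, height, width):
    """MACs for stride 1 with 'same' padding: each weight is used once per output position."""
    return params * frames * height * width


if __name__ == "__main__":
    # Hypothetical layer: 64 channels throughout, 3x3x3 kernel, 8 frames of 32x32.
    c_in = c_mid = c_out = 64
    k_t = k_h = k_w = 3
    frames, height, width = 8, 32, 32

    dense = full_3d_params(c_in, c_out, k_t, k_h, k_w)
    split = factorized_params(c_in, c_mid, c_out, k_t, k_h, k_w)

    print("dense 3D weights:  ", dense)
    print("factorized weights:", split)
    print("dense 3D MACs:     ", macs(dense, frames, height, width))
    print("factorized MACs:   ", macs(split, frames, height, width))
```

With these example sizes, the factorized layer has 49,152 weights against 110,592 for the dense 3D layer, a 2.25x reduction that carries over directly to the MAC count. This toy comparison covers only the kernel factorization and does not attempt to reproduce the larger savings described above.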

Specialized Processing

We have published our research on the advantages of TENNs when they are applied to spatiotemporal data, such as video from traditional frame-based cameras and events from dynamic vision sensors. TENNs can enable higher-quality video object detection within a power budget of tens of milliwatts.

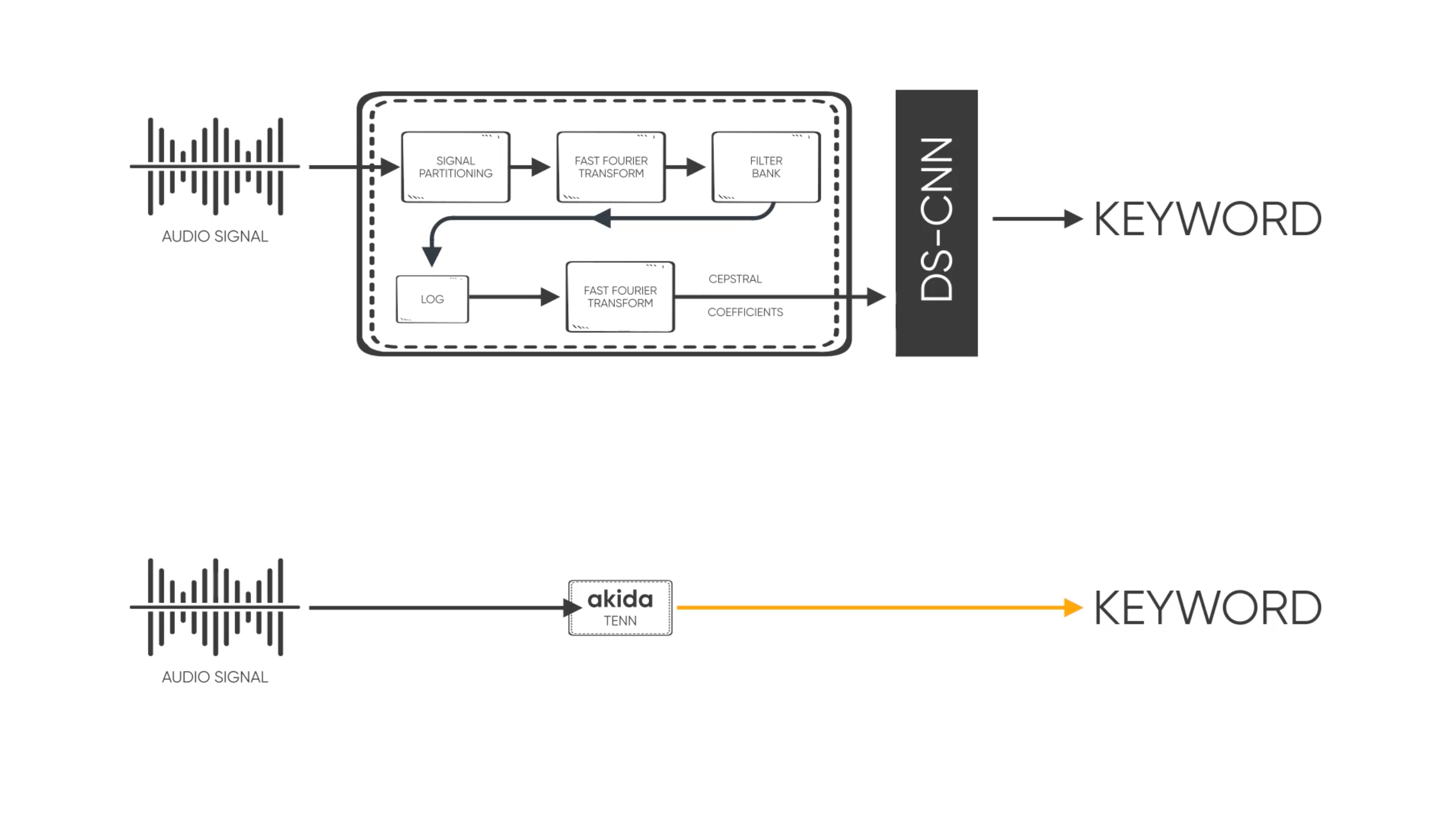

TENNs are equally suitable for processing purely temporal data, like raw audio from microphones and vital signs from health monitors. In these situations, TENNs can minimize the need for expensive DSP or filtering hardware, thereby reducing silicon footprint, energy draw, and Bill of Materials (BOM) cost. This clears the path for devices with much more compact form factors, such as more advanced hearables, wearables, or even new, embeddable medical devices that can be sustained through energy harvesting.

Since TENNs can operate on data from different sensors, they can radically improve analytical decision-making and intelligence for multi-sensor environments in real time.

The Akida platform still includes Edge learning capabilities for classifier networks. This complements TENNs to enable a new generation of smart, secure Edge devices that far exceeds what has been possible so far in power envelopes that are measured in milliwatts or microwatts.